Posts tagged “procyon”

Valve recently announced that Procyon is in the most-recent batch of titles to be given the green light for release on Steam! This is super-exciting news!

There are a few things that I want to add to Procyon before it’s ready for general Steam release: achievements, proper leaderboards, Steam overlay support, etc. Basically, all of the Steam features that make sense.

It’ll be a bit before it gets done, as we’re working on putting the finishing touches on Infamous: Second Son at work, so I’m a little dead by the time I get home at the moment. But once we’ve shipped, I’ll likely have the time and energy to get Procyon rolling out onto Steam.

Yeaaaaaaaah!

It’s been a ridiculously long time coming, but I’m finally breaking my radio silence on this poor, poor blog to let you know that Procyon has, in fact, finally been released!

It’s available on Desura and IndieCity:

(Way-late 2022 edit: no it’s not, anymore – both of those storefronts are gone. But it’s on Steam!)

Also check out Procyon’s nifty homepage: http://procyongame.com!

Thanks to everyone who helped get this thing out the door!

So. Here I am, back again after another long hiatus in blog posting. But now that Procyon is almost done, I figured I should share some news!

First and foremost, Procyon has now been posted to Steam Greenlight! And, to share some of the fun pieces of video, here’s Procyon’s trailer:

(More videos and stuff after the fold)

Next up, and just as fun, here’s a look at the in-game intro to Procyon:

Procyon now has a website! You can check it out at http://procyongame.com

Additionally, you can now check out Procyon on Facebook!

Finally, you can also listen to (and purchase) Procyon’s soundtrack on Bandcamp!

Anyway, that’s what I’ve got for now!

Is this thing on?

Wow.

So apparently I forgot how to update my blog for over a year, making the previous post’s title even more accurate than it should have been.

What’s been going on, you ask? I’ll tell you.

- I’ve switched jobs! Now I’m working at Sucker Punch Productions as a coder (working mostly on missions and the like). It’s a totally fantastic place to work. If you look ever-so-slightly close, you can find my name in the Infamous 2 credits 🙂

- I’ve entered Procyon into DreamBuildPlay and this year’s PAX 10 (no love from the judges, though)

- I’ve been lazy about updating my blog!

- And I’ve updated the holy craps out of Procyon!

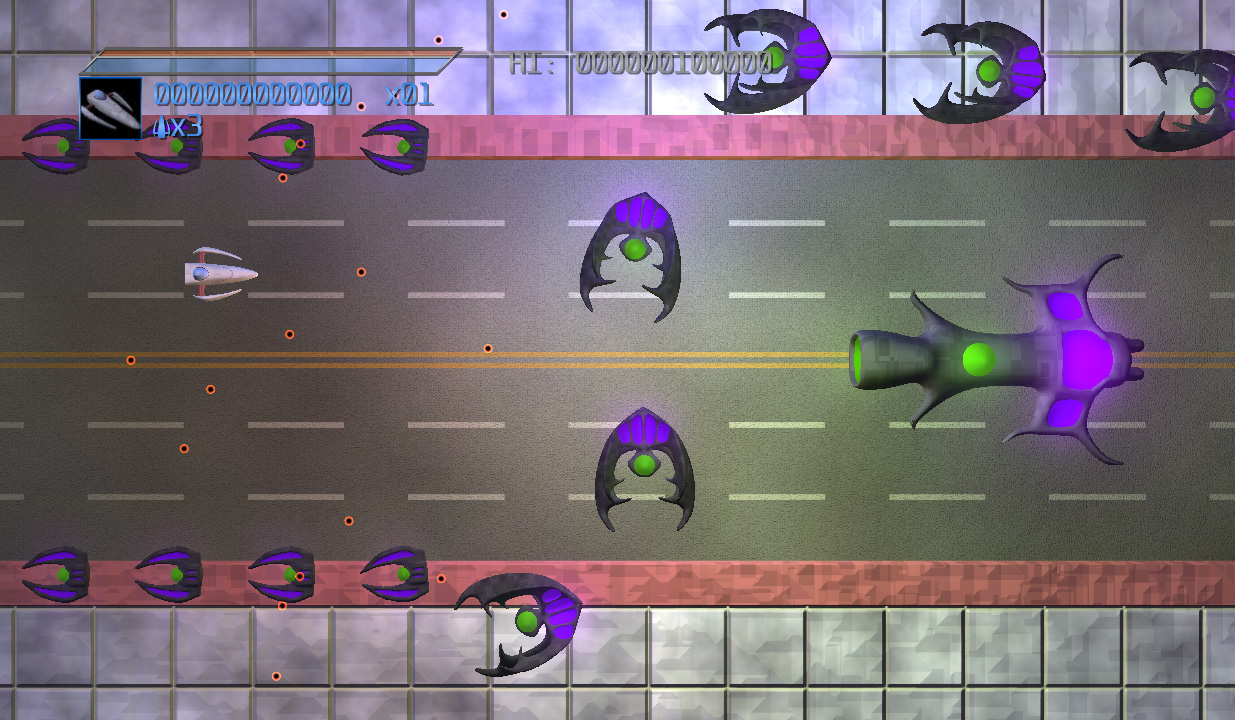

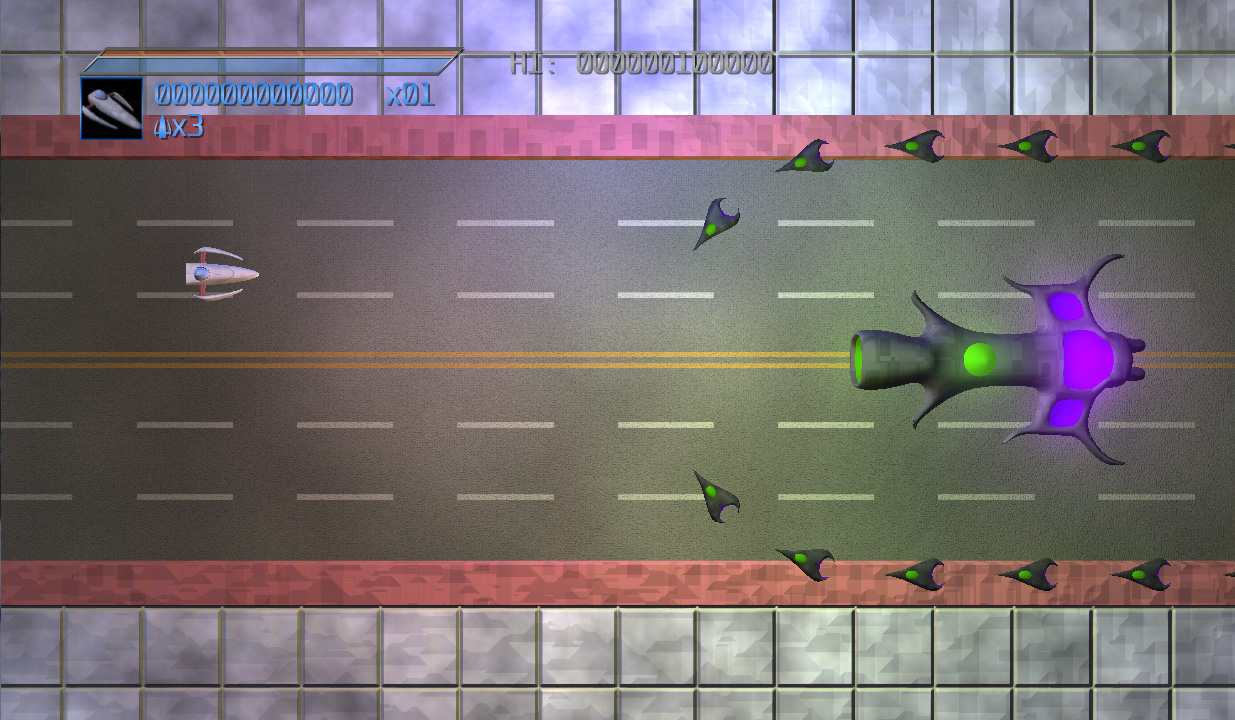

- Entirely new enemies (and enemy art)

- New levels

- Updated special effects

- A new level

- All sorts of new craziness

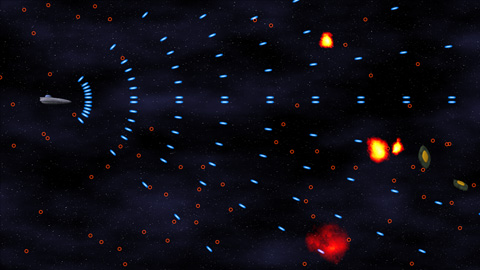

Okay, the list isn’t as long as last post’s, but I have been busy. Some new videos:

Hopefully I’ll update a bit more often than once per year. Sorry for the radio silence. I have a lot of things that I could write about, if only I’d take the time to do so.

I’ll go into more detail later, but here’s a SUPER quick synopsis of the last (yikes!) six months:

- Completed the demo build of Procyon

- Level 3!

- New title screen!

- Finished game flow!

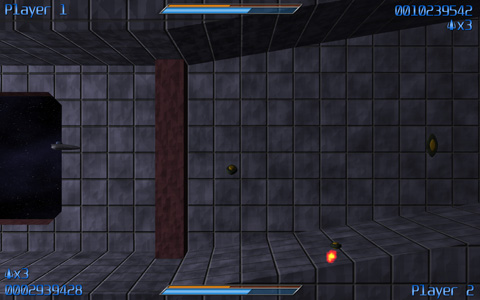

- Working local multiplayer!

- New control scheme!

- New font rendering mechanism!

- New tutorial!

- Another bullet point with an accompanying exclamation point!

- Sent it through several (sometimes-painful) rounds of playtest on the Creators Club forums, and got some great feedback (especially the feedback from one Jason Doucette of Xona Games, who gave some painful-to-hear but necessary criticism)

- Submitted to the PAX 10 competition

- Learned that I was not accepted into the PAX 10 competition 🙁

- Started work fixing the networked multiplayer that I broke while working towards the demo (I’d disabled it for the demo so that I could keep my focus elsewhere!)

Anyway, that’s my short update. But fear not, for here, too, is a pair of videos!

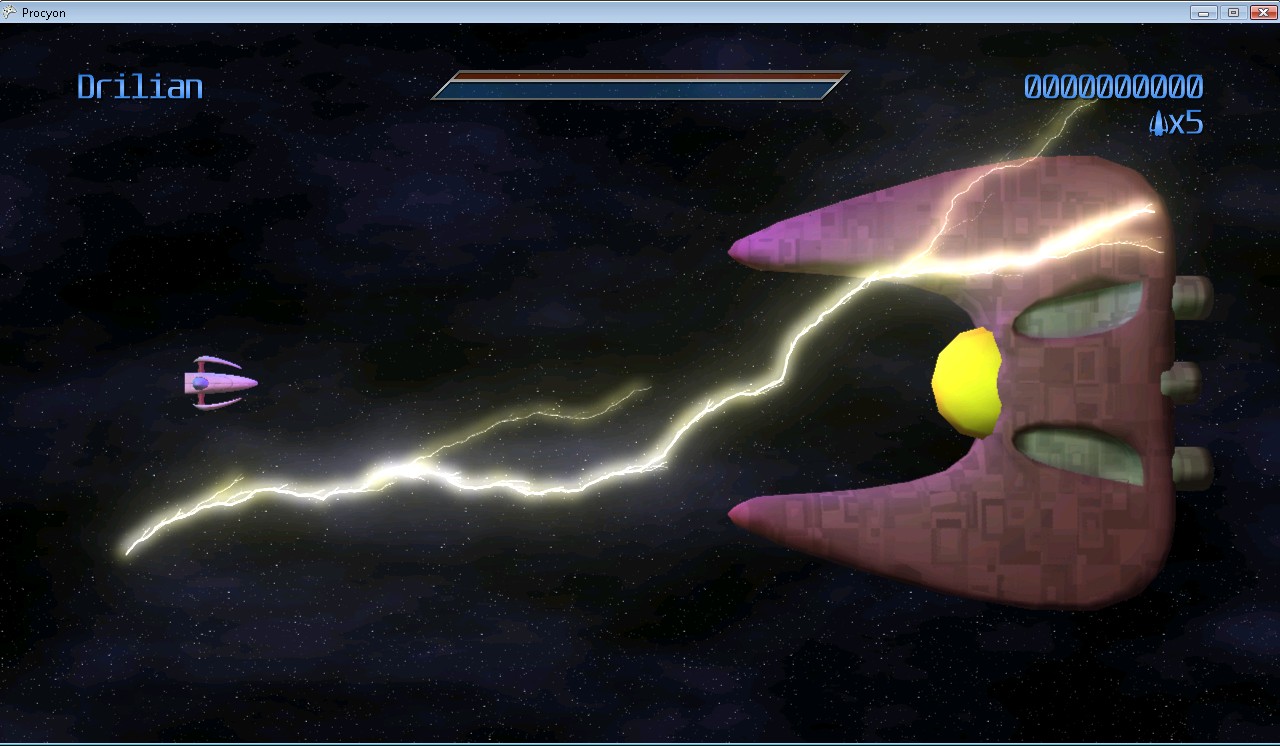

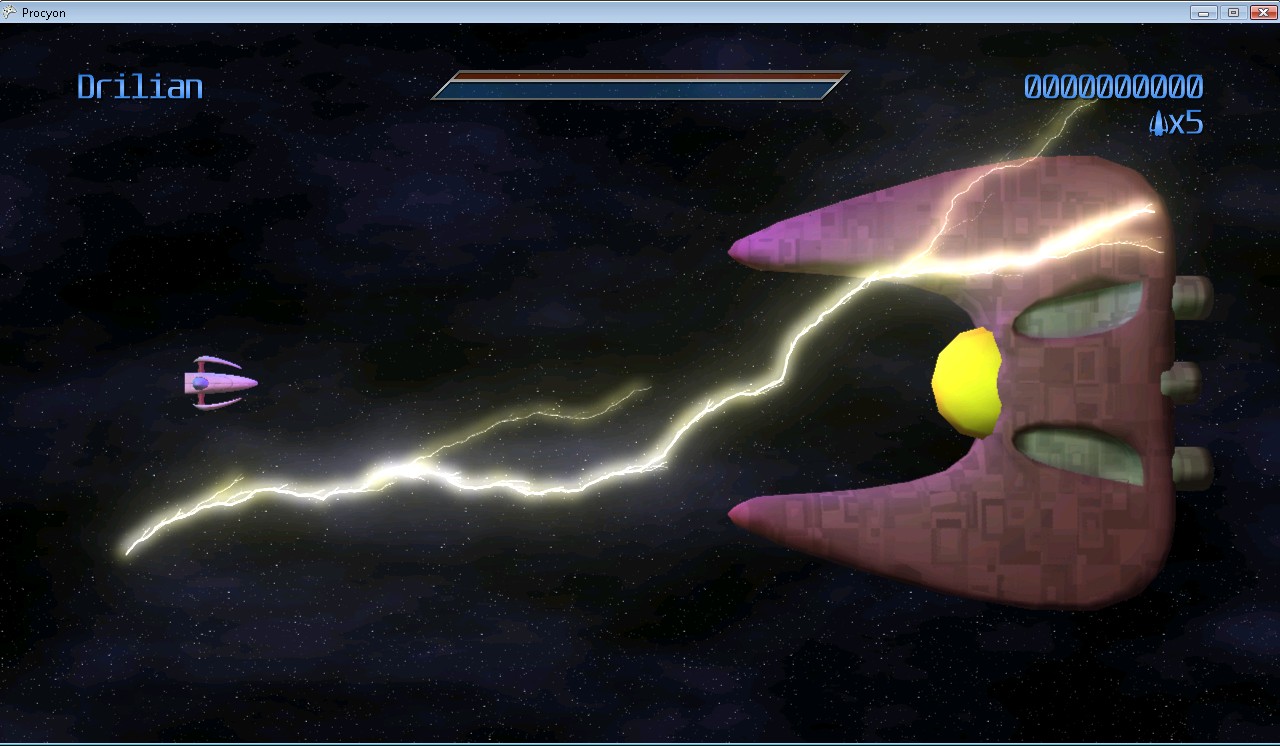

Just another quick update to show off a pair of videos of the in-progress level 2 boss.

First, note that the textures that I have on there currently are horrible, and I know this. It’s okay.

The first video was simply to test the independent motion of the various moving parts of the boss:

[video gone, sadly]

aaaaand the second shows off the missile-launching capabilities of the rear hatches (complete with brand-new missile effects and embarrassing flights into the direct path of the missiles!):

[video also gone, also sadly]

That’s all for now!

Yeesh.

It’s been a while.

What have I been up to since early June? Quite a bit. On the non-game-development side of things: work’s been rather busy (still). Also, I now own (and have made numerous improvements on) a house! So that’s been eating up time. But, that’s not (really) what this site is about.

(Procyon and texture generator devlog below the fold)

Invisible Changes

Much of my time working on Procyon recently has been spent doing changes deep in the codebase: things that, unfortunately, have absolutely no reflection in the user’s view of the product, but that make the code easier to work with or, more importantly, more capable of handling new things. For instance, enemies are now based on components as per an article on Cowboy Programming, making it super easy to create new enemy behaviors (and combinations of existing behaviors). It took quite a few days of work to do this, and when I was finished, the entire game looked and played exactly like it had before I started. However, the upside is that the average enemy is easier to create (or modify).
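To give a feel for the component idea, here is a minimal sketch in Python (the game itself is written against XNA, so this is illustration only). `Enemy`, `MoveStraight`, and `FireEvery` are hypothetical names, not taken from Procyon's code.

```python
class MoveStraight:
    """Moves the owning enemy by a fixed velocity each tick."""
    def __init__(self, dx, dy):
        self.dx, self.dy = dx, dy

    def update(self, enemy, frame):
        enemy.x += self.dx
        enemy.y += self.dy


class FireEvery:
    """Records a shot every `interval` frames."""
    def __init__(self, interval):
        self.interval = interval

    def update(self, enemy, frame):
        if frame % self.interval == 0:
            enemy.shots.append(frame)


class Enemy:
    """An enemy is just some state plus a list of behavior components."""
    def __init__(self, x, y, components):
        self.x, self.y = x, y
        self.components = components
        self.shots = []

    def update(self, frame):
        for component in self.components:
            component.update(self, frame)


# New enemy types are just new combinations of existing components.
drifter = Enemy(0, 0, [MoveStraight(0, 2)])
gunner = Enemy(0, 0, [MoveStraight(1, 0), FireEvery(30)])
for frame in range(1, 61):
    drifter.update(frame)
    gunner.update(frame)
```

The point of the design is in the last few lines: a new behavior combination needs no new enemy class, only a different component list.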

Graphical Enhancements

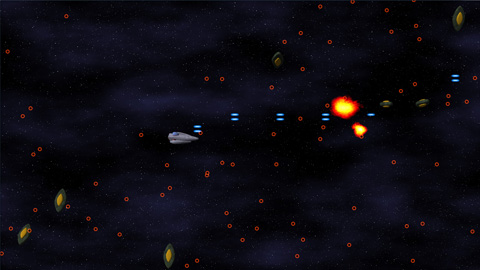

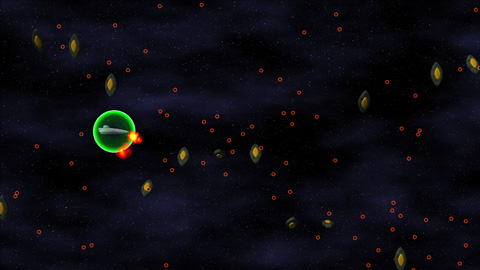

I’ve also made a few enhancements to the graphics. The big one is that I have a new particle system for fire-and-forget particles (i.e. particles that are not affected by game logic past their spawn time). It’s allowed me to add some nice new explosion and smoke effects (among other things):

Also, I used them to add some particles at the origins of enemies firing beams:

Additionally, particles now render solely to an off-screen, lower-resolution buffer, which has allowed the game to run (thus far) at true 1080p on the Xbox 360.

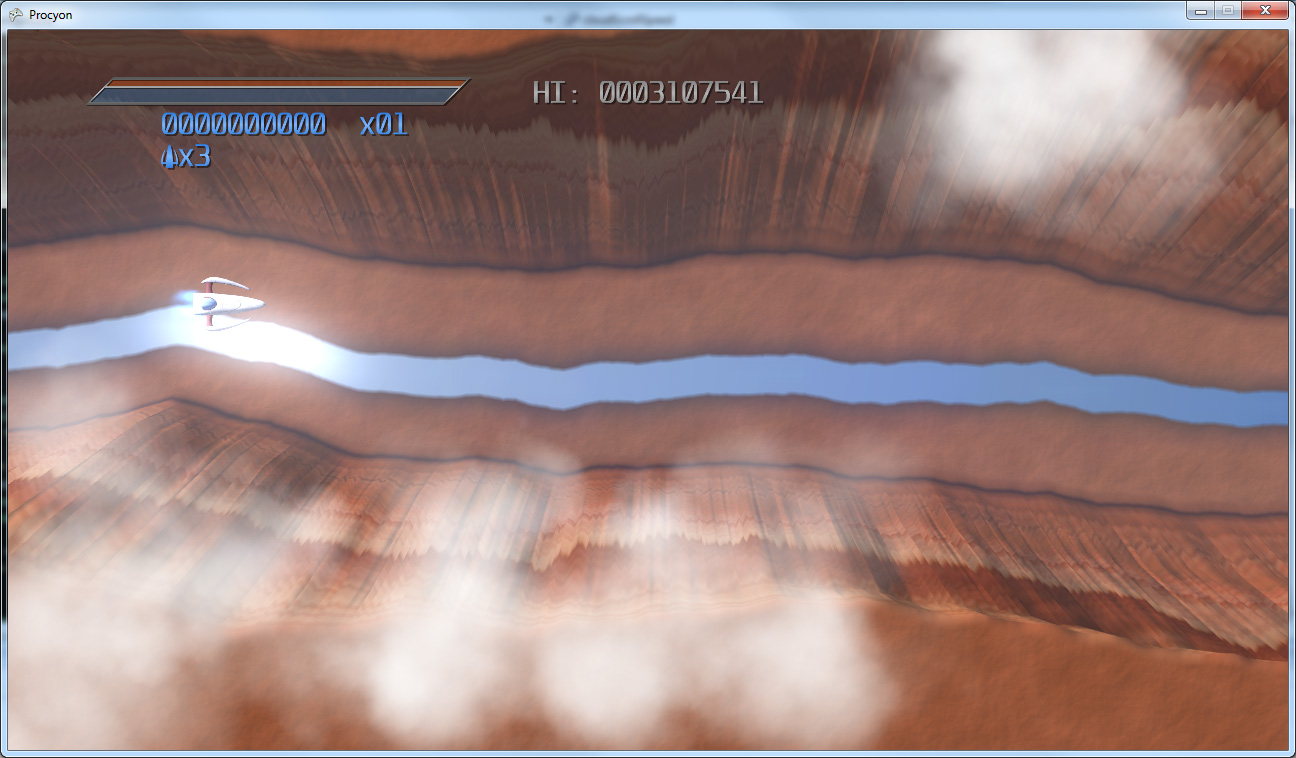

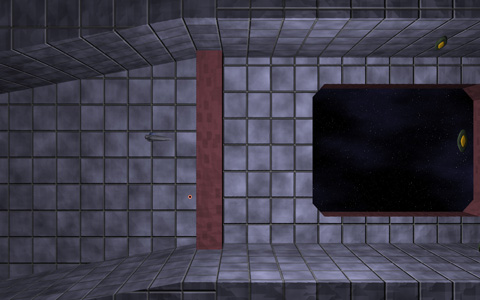

Also, I decided that the Level 1 background (in the first two images in this post) was hideously bland (if such a thing is possible), so I decided to redo it as flying over a red desert canyon (incidentally, the walls of the canyon are generated using the same basic divide-and-offset algorithm as my lightning bolt generator):
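The exact generator isn't shown in this post, but a common form of divide-and-offset (often called midpoint displacement) looks roughly like the Python sketch below. The function name, the halving of the offset at each level, and the parameters are all assumptions made for illustration.

```python
import random


def divide_and_offset(start, end, max_offset, depth, rng):
    """Return a list of heights from start to end (inclusive).

    Each level splits the segment in half, nudges the midpoint by a random
    amount, then recurses on both halves with a smaller offset.
    """
    if depth == 0:
        return [start, end]
    mid = (start + end) / 2 + rng.uniform(-max_offset, max_offset)
    left = divide_and_offset(start, mid, max_offset / 2, depth - 1, rng)
    right = divide_and_offset(mid, end, max_offset / 2, depth - 1, rng)
    return left + right[1:]  # drop the duplicated midpoint


# A seeded generator keeps the same wall shape from run to run.
wall = divide_and_offset(0.0, 0.0, 8.0, 5, random.Random(42))
```

With a depth of 5 this yields 33 points, which could serve as one canyon wall profile (or, with different offsets, one lightning bolt).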

Texture + Generator = Texture Generator

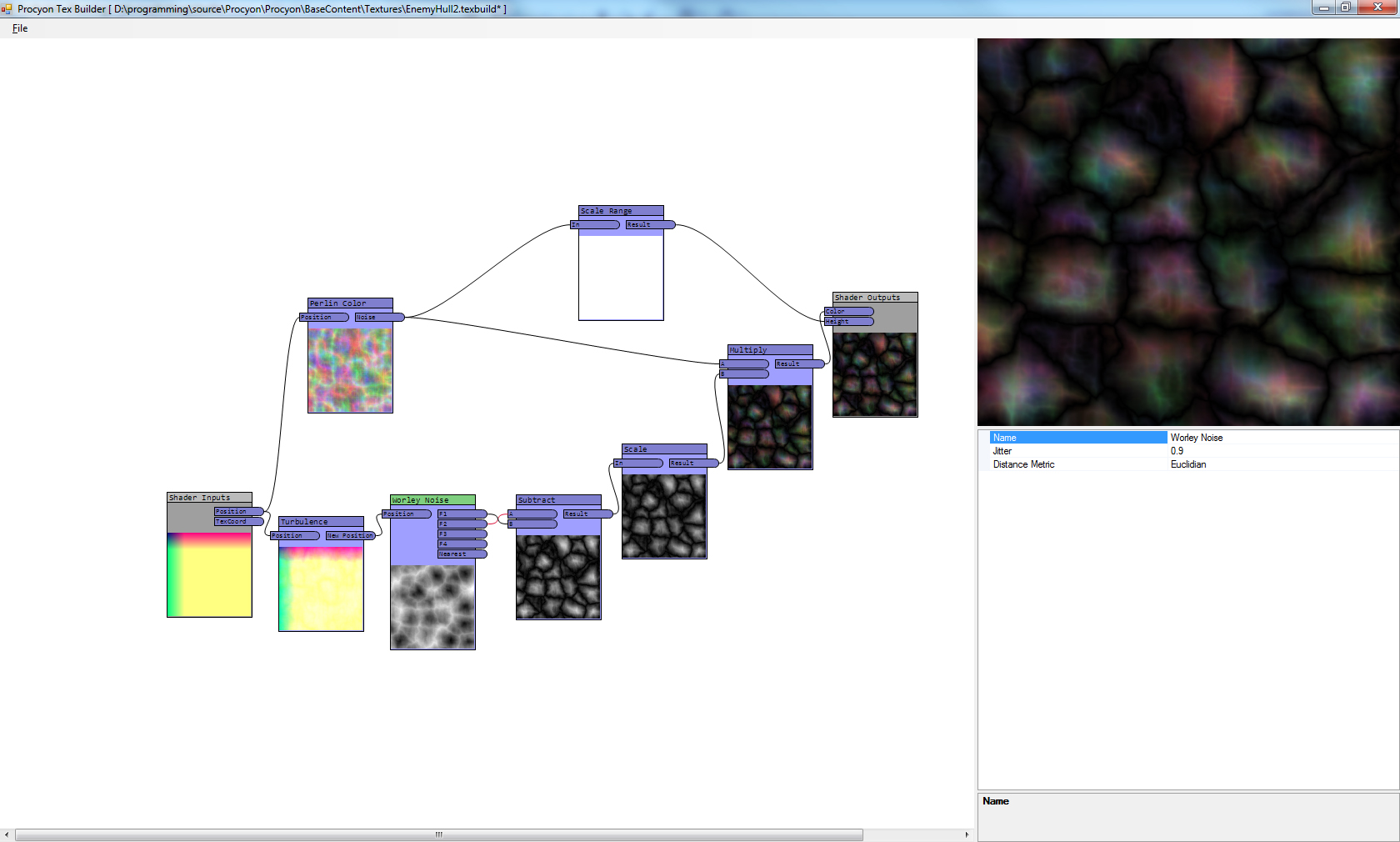

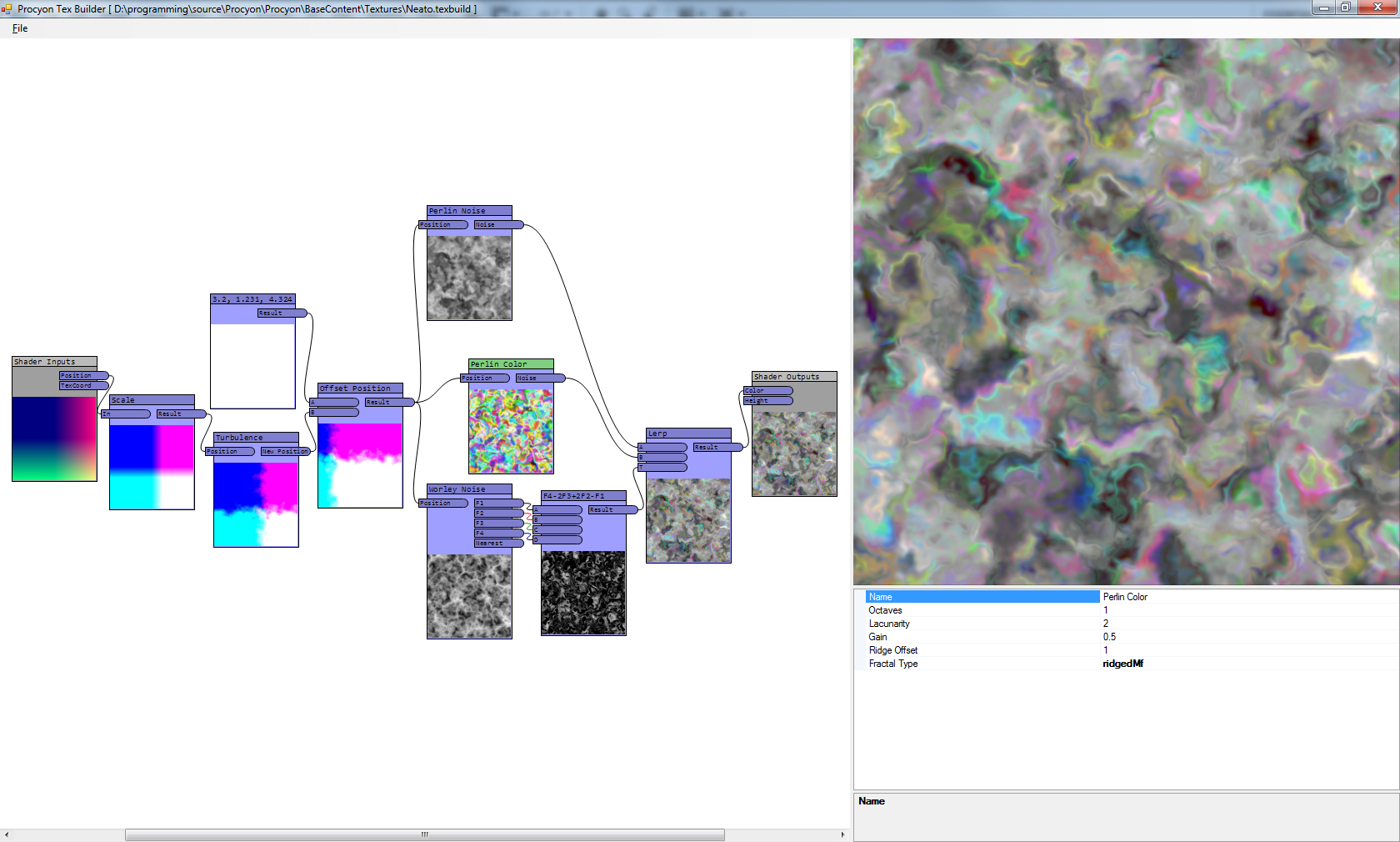

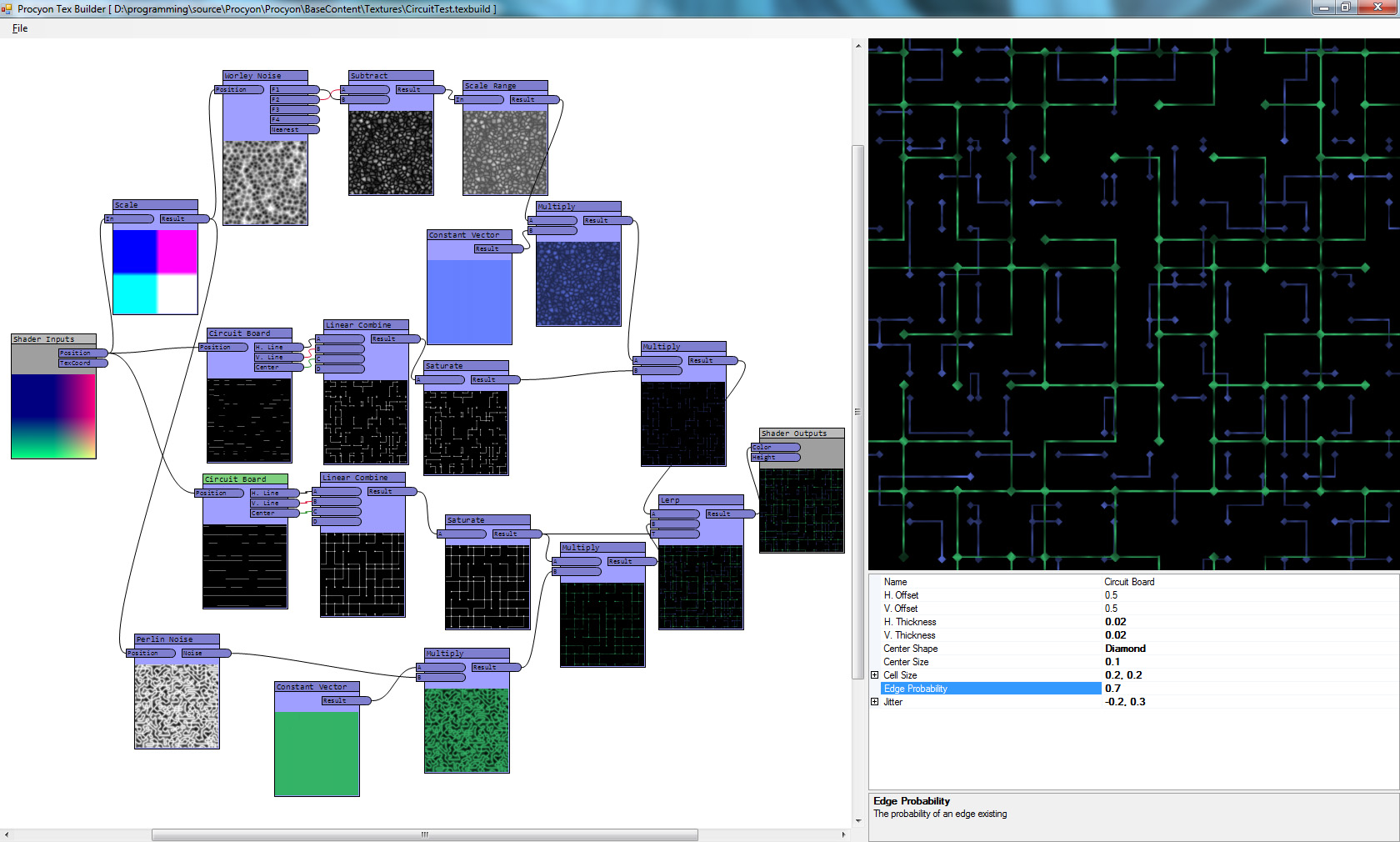

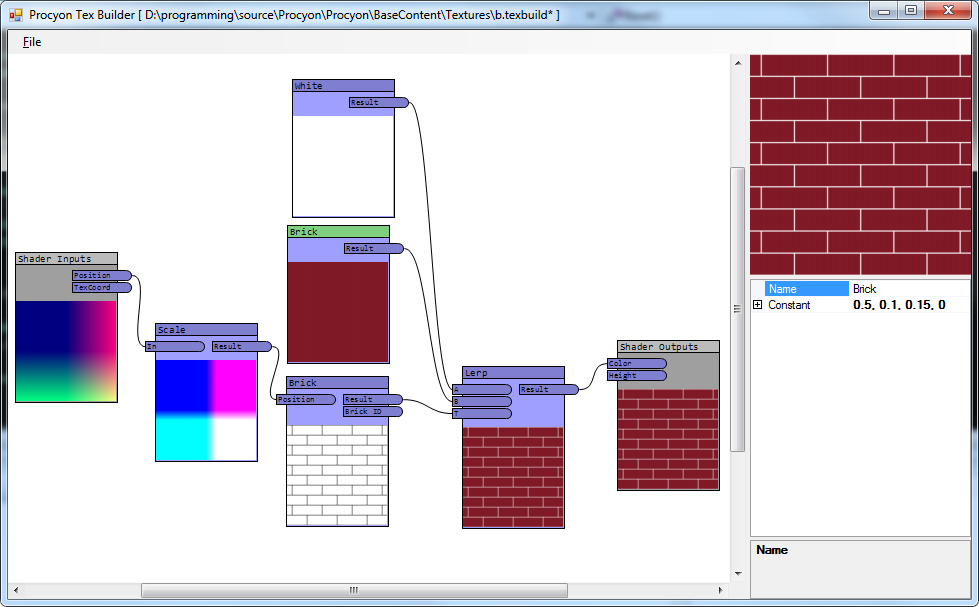

The big thing I’ve done, though, was put together a tool that will generate the HLSL required for my GPU-generated textures, so that I didn’t have to constantly tweak HLSL, rebuild my project, reload, etc. Now I can see them straight in an editor (though not, yet, on the meshes themselves – that is on my list of things to do still). It looks basically like this:

The basic idea is, I have a snippet of HLSL, something that looks roughly like:

    Name: Brick
    Func: Brick
    Category: Basis Functions
    Input: Position, var="position"
    Output: Result, var="brickOut"
    Output: Brick ID, var="brickId"
    Property: Brick Size, var="brickSize", type="float2", description="The width and height of an individual brick", default="3,1"
    Property: Brick Percent, var="brickPct", type="float", description="The percentage of the range that is brick (vs. mortar)", default="0.9"
    Property: Brick Offset, var="brickOffset", type="float", description="The horizontal offset of the brick rows.", default="0.5"
    %%
    float2 id;
    brickOut = brick(position.xy, brickSize, brickPct, brickOffset, id);
    brickId = id.xyxy;

Above the “%%” is information on how it interacts with the editor, what the output names are (and which vars in the code they correspond to), and what the inputs and properties are.

Inputs are inputs on the actual graph, from previous snippets. Properties, by comparison, are what show up on the property grid. I simplified the inputs/outputs by making them always be float4s, which made the shaders really easy to generate.
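As a rough illustration of how a snippet file like the Brick one could be split into editor metadata and HLSL body, here is a small Python sketch. The real tool's parser isn't shown in the post, so `parse_snippet` and the dictionary layout it returns are hypothetical.

```python
import re

SNIPPET = '''Name: Brick
Func: Brick
Category: Basis Functions
Input: Position, var="position"
Output: Result, var="brickOut"
Output: Brick ID, var="brickId"
Property: Brick Size, var="brickSize", type="float2", description="The width and height of an individual brick", default="3,1"
Property: Brick Percent, var="brickPct", type="float", description="The percentage of the range that is brick (vs. mortar)", default="0.9"
Property: Brick Offset, var="brickOffset", type="float", description="The horizontal offset of the brick rows.", default="0.5"
%%
float2 id;
brickOut = brick(position.xy, brickSize, brickPct, brickOffset, id);
brickId = id.xyxy;
'''


def parse_snippet(text):
    """Split a snippet into header metadata and the HLSL body after '%%'."""
    header, body = text.split("%%", 1)
    info = {"inputs": [], "outputs": [], "properties": [], "body": body.strip()}
    plural = {"Input": "inputs", "Output": "outputs", "Property": "properties"}
    for line in header.strip().splitlines():
        if not line.strip():
            continue
        key, _, rest = line.partition(":")
        key, rest = key.strip(), rest.strip()
        if key in plural:
            # The display label comes first, then key="value" attributes.
            label = rest.split(",", 1)[0].strip()
            attrs = dict(re.findall(r'(\w+)="([^"]*)"', rest))
            info[plural[key]].append({"label": label, **attrs})
        else:
            info[key.lower()] = rest
    return info


brick = parse_snippet(SNIPPET)
```

Here `brick["properties"]` is what would feed a property grid, while `brick["inputs"]` and `brick["outputs"]` describe the connection points on the graph node.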

Then, there’s a template file that is filled in with the generated data. In this case, the template uses some structure I had in place for the hand-written ones. The input and output nodes in the graph are based on data from this template, as well. In my case, the inputs are position and texture coordinates, and the outputs are color and height (for normal mapping).

So a simple graph like this:

…would, once generated, be HLSL that looks like this:

    #include "headers/baseshadersheader.fxh"

    void FuncConstantVector(float4 constant, out float4 result)
    {
        result = constant;
    }

    void FuncScale(float4 a, float factor, out float4 result)
    {
        result = a*factor;
    }

    void FuncBrick(float4 position, float2 brickSize, float brickPct, float brickOffset, out float4 brickOut, out float4 brickId)
    {
        float2 id;
        brickOut = brick(position.xy, brickSize, brickPct, brickOffset, id);
        brickId = id.xyxy;
    }

    void FuncLerp(float4 a, float4 b, float4 t, out float4 result)
    {
        result = lerp(a, b, t.x);
    }

    void ProceduralTexture(float3 positionIn, float2 texCoordIn, out float4 colorOut, out float heightOut)
    {
        float4 position = float4(positionIn, 1);
        float4 texCoord = float4(texCoordIn, 0, 0);

        float4 generated_result_0 = float4(0,0,0,0);
        FuncConstantVector(float4(1, 1, 1, 1), generated_result_0);

        float4 generated_result_1 = float4(0,0,0,0);
        FuncConstantVector(float4(0.5, 0.1, 0.15, 0), generated_result_1);

        float4 generated_result_2 = float4(0,0,0,0);
        FuncScale(position, float(5), generated_result_2);

        float4 generated_brickOut_0 = float4(0,0,0,0);
        float4 generated_brickId_0 = float4(0,0,0,0);
        FuncBrick(generated_result_2, float2(3, 1), float(0.9), float(0.5), generated_brickOut_0, generated_brickId_0);

        float4 generated_result_3 = float4(0,0,0,0);
        FuncLerp(generated_result_0, generated_result_1, generated_brickOut_0, generated_result_3);

        float4 color = generated_result_3;
        float4 height = float4(0,0,0,0);

        colorOut = color.xyzw;
        heightOut = height.x;
    }

    #include "headers/baseshaders.fxh"

Essentially, each snippet becomes a function. Again, if you compare FuncBrick to the brick snippet from earlier, you can see that the input comes first (and is a float4), the properties come next (with types based on the snippet’s declaration), followed finally by the outputs. Once each function is in place, the shader itself calls each function in the graph, storing the results in unique variables, and passes those into the appropriate functions later down the line. (In this case, the actual shader lives in headers/baseshaders.fxh, included at the end; it simply calls the ProceduralTexture function defined just before it.)
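The emission step can be sketched in a few lines of Python. This is not the tool's actual code: `emit_function` and `emit_call` are hypothetical helpers that just reproduce the parameter ordering (inputs, then properties, then outputs) and the `generated_<name>_<index>` naming visible in the HLSL listing.

```python
def emit_function(func, inputs, properties, outputs, body):
    """Build the HLSL function for one snippet.

    inputs and outputs are variable names (always float4); properties are
    (name, hlsl_type) pairs; body is a list of HLSL source lines.
    """
    params = [f"float4 {name}" for name in inputs]
    params += [f"{hlsl_type} {name}" for name, hlsl_type in properties]
    params += [f"out float4 {name}" for name in outputs]
    lines = [f"void Func{func}({', '.join(params)})", "{"]
    lines += [f"    {line}" for line in body]
    lines.append("}")
    return "\n".join(lines)


def emit_call(func, args, outputs, index):
    """Declare one uniquely named float4 per output, then call the function."""
    names = [f"generated_{out}_{index}" for out in outputs]
    lines = [f"float4 {name} = float4(0,0,0,0);" for name in names]
    lines.append(f"Func{func}({', '.join(args + names)});")
    return lines


brick_function = emit_function(
    "Brick",
    inputs=["position"],
    properties=[("brickSize", "float2"), ("brickPct", "float"), ("brickOffset", "float")],
    outputs=["brickOut", "brickId"],
    body=[
        "float2 id;",
        "brickOut = brick(position.xy, brickSize, brickPct, brickOffset, id);",
        "brickId = id.xyxy;",
    ],
)
brick_call = emit_call(
    "Brick",
    args=["generated_result_2", "float2(3, 1)", "float(0.9)", "float(0.5)"],
    outputs=["brickOut", "brickId"],
    index=0,
)
```

Because every graph connection is a float4, the generator never has to reason about type conversions between nodes; only the properties carry their own types.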

More Content

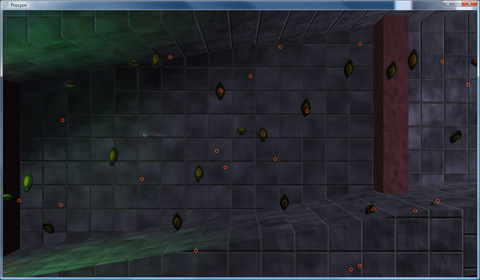

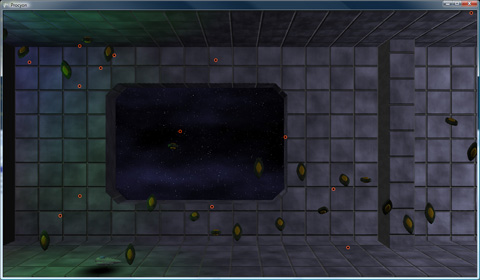

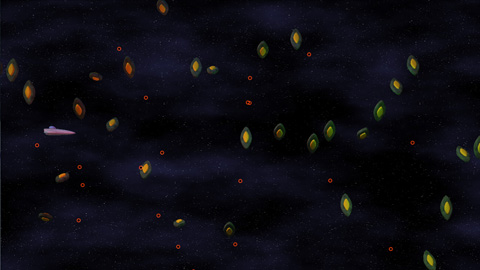

In addition to all of that, I have also completed the first draft of level 2’s enemy layout (including the level’s new enemy type), and am working on the boss of the level:

Once I finish the boss of level 2, I’ll start on level 3’s gameplay (and boss). Once those are done and playable, I’ll start adding the actual backgrounds into them (instead of them being a simple, static starfield).

Bug Tracking

Finally, I’ve started an actual bug tracking setup, based on the easy-to-use Flyspray, which I highly recommend as a quick, easy-to-set-up web-based bug/feature tracker.

In the case of the database I have, I’ve linked to the roadmap, which is the list of the things that have to be done at certain stages. I am going to try to submit my game for the PAX 10 this year, so I have my list of what must be done to have a demo ready (the “PAX Demo” version), and the things that, additionally, I’d really like to have ready (the “PAX Demo Plus” version). Then, of course, there’s “Feature Complete”, which is currently mostly full of high-level work items (like “Finish all remaining levels”, which is actually quite a huge thing) that need to be done before the game is in a fully-playable beta stage.

In short: I’m still cranking away at my game, just more slowly than I’d like.

In my previous two posts, I started looking into what it would take to code the networking for my game, and came up with a first draft, before realizing that floating point discrepancies between systems totally threw my lockstepping idea for a loop.

Lockstepping With Collisions

In order to solve the issue with different systems having different floating point calculation results, I decided to somewhat revamp the network design, and really leverage the fact that I don’t care so much about cheating – you could never get away with a networking scheme like this in a competitive game.

(Latency, packet loss, and bandwidth use analysis below the fold)

- First, the timing of the scrolling, player bullet fire rates, enemy fire rates, etc. were all modified to be integer-based instead of float-based. There are no discrepancies in the way that integer calculations happen from machine to machine, so the timings of things like enemy spawning, level scrolling, etc. are now all perfectly in sync, frame by frame.

- Next, when the client detects a collision between entities, it sends a message to the server (which, you may recall, is running on the same system as the client – each machine gets one) notifying it of a collision. These messages are also synced across the network.

- Thus, whenever an enemy dies on either client, it dies on both servers on the same tick (that is, the “first” client relative to the game clock to detect a collision determines when the collision took place). This means that there are no longer any timing differences between deaths on each server (and if a collision is missed by one client but detected by the other, the report will still eventually reach the client that missed it).

- Because players were already sending “I died!” flags as part of their network packets, these were already always perfectly in sync, so no change was needed there.
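As an example of what integer-based timing can look like, here is a small Python sketch of a fire-rate timer that counts whole frames instead of accumulating float seconds. `FireTimer` and the 6-frame interval are made up for illustration.

```python
class FireTimer:
    """Cooldown measured in whole frames, so every machine agrees exactly."""
    def __init__(self, frames_between_shots):
        self.frames_between_shots = frames_between_shots
        self.cooldown = 0

    def tick(self, wants_to_fire):
        """Advance one frame; return True if a shot is fired on this frame."""
        if self.cooldown > 0:
            self.cooldown -= 1
        if wants_to_fire and self.cooldown == 0:
            self.cooldown = self.frames_between_shots
            return True
        return False


# Holding the fire button for 30 frames.
timer = FireTimer(frames_between_shots=6)
shot_frames = [frame for frame in range(30) if timer.tick(True)]
```

Since nothing here depends on floating point rounding, two machines fed the same inputs fire on exactly the same frames.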

As an added bonus, since all collision detections are now handled by the client (and communicated to the server), the server never has to do any collision detection calculations on its own, which eases up on the CPU load somewhat (previously, the client and server were both doing collision calculations). All the server has to do now is apply collisions reported by either the local client or the remote client.
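A stripped-down Python sketch of the "earliest report wins" rule is below. It assumes, purely for brevity, that a single collision kills an enemy; `LockstepServer` and its methods are hypothetical names.

```python
class LockstepServer:
    """Applies collision reports from either client; runs no collision checks itself."""
    def __init__(self):
        self.death_tick = {}  # enemy id -> tick on which the enemy dies

    def report_collision(self, enemy_id, tick):
        # Whichever client saw the hit on the earlier tick decides the death tick.
        current = self.death_tick.get(enemy_id)
        if current is None or tick < current:
            self.death_tick[enemy_id] = tick

    def is_alive(self, enemy_id, tick):
        death = self.death_tick.get(enemy_id)
        return death is None or tick < death


# Both machines see the same two reports, but in a different order.
server_a, server_b = LockstepServer(), LockstepServer()
server_a.report_collision(enemy_id=7, tick=120)
server_a.report_collision(enemy_id=7, tick=118)
server_b.report_collision(enemy_id=7, tick=118)
server_b.report_collision(enemy_id=7, tick=120)
```

Taking the minimum tick makes the outcome independent of which report a server happens to process first, which is what keeps the two servers in agreement.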

So now, the actual mechanism by which the game keeps in sync from system to system is set, but how does it handle the three main enemies of the network programmer?

Network Gaming’s Most Wanted #1 – Latency

“Lag” is one of the most dreaded words in the network gaming world. It’s always going to be present – nothing can communicate across the internet faster than the speed of light (and, because of transmission over copper, it’s really more like a sluggish 2/3rds the speed of light!). Routers and switches also add their own delays to the mix. According to statistics gathered by Bungie from Halo 3’s gameplay, most gamers (roughly 90%) end up with a round-trip latency of less than (or equal to) 250ms. That is, it takes a quarter of a second for data to go from System A to System B and back to A. That’s a long time for a fast-action networked game! Thankfully, because messages sent from system to system in this game’s network design are never dependent on messages from the other, nothing has to round trip, so the latencies can effectively be halved, making the system much better at handling lag (because, quite frankly, there’s just less of it!)

As discussed previously, because the client can run ahead of the server and, thus, process local player input immediately, there’s no latency in what the player presses and what actually happens on-screen. But what about how the remote player’s actions look? With a ping under 100ms, there are next to zero visible discrepancies on the system. That is, low-ping games are virtually indistinguishable from locally-played games.

At around the 400ms ping mark, it does start to become obvious that things aren’t quite right – due to the interpolation of remotely-shot bullets, they accelerate up to a certain part of the screen until they reach their known location, then slow down to normal speed, which is fairly noticeable (I’m still trying to smooth this out a touch). When enemies get too close to the remote player as it fires, due to the delay, the bullets will collide with the enemy, but the enemy will live longer than it appears it should (because the local client does not reliably know that the remote bullet is actually still alive, it can’t deal actual damage to the enemy; it has to wait for the server to confirm).

Above 1-2 seconds of latency, all bets are off – the local player will find the game still perfectly playable, but the movements of the remote player will be completely erratic, and remotely fired bullets won’t act at all like they should. But, since 90% of gamers have much lower latencies, this is not really an issue. For the majority of gamers, the game will look and play pretty close to how it would if both players were in the same room.

Network Gaming’s Most Wanted #2 – Packet Loss

Latency’s lesser-known brother is packet loss, which is where data sent from one machine to another never makes it (due to routing hardware failure, power outage, NSA interception, alien abduction, etc). On a standard internet connection, you can generally assume that about 10% of the packets that you send will get lost along the way. Also, just because you send Packet A before Packet B doesn’t mean they’ll arrive in the right order – a machine might get a packet sent later before one that was sent earlier.

With the XNA runtime, there are four different methods that you can send packets with (obviously you can mimic these with any networking setup, but I’m using XNA so it’s my frame of reference here):

- Unreliable – the other system will get these in potentially any order, or it may not even get them at all. The name says it all – you can’t rely on these packets. This is probably not the best option to use.

- In-Order – These packets are for data for which you really only need the most recent data; you only care about the most recent score, for instance – not what the score was in a previous packet. Thus, these packets contain extra version information so that the XNA runtime can ensure that packets that arrive out of order don’t reach you. As soon as a new packet comes in, it becomes available to the game. If a packet that’s older than the most recent one comes in, it’s discarded. You immediately get new ones at the cost of never getting older ones. For many games, this is a perfect scenario.

- Reliable – These packets will always arrive. When the XNA runtime receives one of these, it sends an acknowledgement to the other system that it received it. If the system that sent it doesn’t receive such an acknowledgement, it’ll resend (and resend and resend and…) until it finally arrives at the destination. Packets sent reliably are not vulnerable to packet loss; if you send it, as long as the connection remains valid you know it will reach the destination eventually. However, these packets may not arrive in the proper order (you may receive Packet C before Packets A or B).

- Reliable, In-Order – On the surface, this sounds like the best choice! These packets always arrive in the right order, and they always arrive! That is, you will always get Packets A, B, C, and D, in that order. There’s a hidden downside, though: If the game receives Packet C, but has not yet received Packets A or B, it has to hold onto that packet until both A and B arrive, which, if they need to be resent, can really ratchet up the latency. Any one packet that needs to be resent will hold up the whole line until it arrives. Clearly, this type of packet should only be used when absolutely necessary – for normal gameplay, it’s better to use In-Order or Reliable on their own.

Eventually, I decided to send packets in the Reliable way, but not In-Order. But, to minimize the amount that the game has to wait for resent packets to arrive, each packet contains eight frames’ worth of input/collision data. That way, as long as one out of every string of 8 packets arrives, the server will have all of the relevant information to sync up to that point. And if, for some reason, 8 packets in a row are all lost in transmission, they’ll be resent and make it eventually anyhow.
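The sending side of this redundancy is easy to sketch in Python. `InputSender` is a hypothetical name, and the payload here is a placeholder string rather than real input/collision data.

```python
from collections import deque

FRAMES_PER_PACKET = 8


class InputSender:
    """Keeps the most recent frames so every packet repeats the previous seven."""
    def __init__(self):
        self.recent = deque(maxlen=FRAMES_PER_PACKET)

    def build_packet(self, frame, data):
        self.recent.append((frame, data))
        return list(self.recent)  # oldest frame first


sender = InputSender()
packets = [sender.build_packet(frame, f"input{frame}") for frame in range(20)]

# Pretend only every eighth packet survives the trip.
survivors = [packets[7], packets[15]]
frames_received = {frame for packet in survivors for frame, _ in packet}
```

In this toy run, two surviving packets out of the first sixteen still deliver every frame from 0 through 15.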

To handle this, the game essentially has a list of frames that it’s received data for (8 of which come in with each packet).

- For each frame that a packet contains, if the frame has already been simulated by the server (a frame from the past), that frame is ignored.

- Similarly, if the frame is already in the list, it’s ignored.

- If it’s not a past frame and it isn’t already in the list, add it to the list (in order – the list is sorted from earliest to latest).

- After this is done, if the next frame that the server needs to simulate is in the list, remove it from the list and go! Otherwise, wait until it is.
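The steps above can be sketched as a small Python class. `FrameBuffer` and its method names are hypothetical; packets are assumed to be lists of `(frame, payload)` pairs.

```python
import bisect


class FrameBuffer:
    """Collects frames from redundant packets and releases them in order."""
    def __init__(self):
        self.next_frame = 0  # next frame the server needs to simulate
        self.pending = []    # sorted frame numbers waiting to be simulated
        self.data = {}       # frame number -> input/collision payload

    def receive(self, packet):
        for frame, payload in packet:
            if frame < self.next_frame:  # already simulated: ignore
                continue
            if frame in self.data:       # already in the list: ignore
                continue
            bisect.insort(self.pending, frame)  # keep earliest-to-latest order
            self.data[frame] = payload

    def pop_ready(self):
        """Return (frame, payload) pairs for every frame that can run now."""
        ready = []
        while self.pending and self.pending[0] == self.next_frame:
            frame = self.pending.pop(0)
            ready.append((frame, self.data.pop(frame)))
            self.next_frame += 1
        return ready


buffer = FrameBuffer()
buffer.receive([(1, "b"), (2, "c")])  # arrives first, but frame 0 is missing
stalled = buffer.pop_ready()
buffer.receive([(0, "a"), (1, "b")])  # the earlier packet shows up late
released = buffer.pop_ready()
```

Arrival order doesn't matter here: the server stalls only while the one frame it needs next is missing.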

The game doesn’t care which order the frames are received in – as long as it has the next one in the list, it’ll be able to continue on. Because of the redundancy, it rarely has to wait on a resend due to packet loss. In fact, using XNA’s built-in packet loss simulation (thank you, XNA team!), the packet loss has to be increased to over 90% before the latency of the simulation starts to increase (hypothetically, the magic number is above 87.5% packet loss – greater than 7/8 packets lost).

The disadvantage of this system is that it does add to the bandwidth use, as each packet now contains an average of eight times as much data as it would normally, which brings us to…

Network Gaming’s Most Wanted #3 – Bandwidth

Ah, bandwidth. There’s no point in having a low-latency connection between two systems if the game requires too much bandwidth for the connection to keep up. Because the Xbox Live bandwidth requirement is 8KB/s (that’s kilo*bytes*), that became my goal as well.

This is where I overengineered my system a bit. I was estimating, as a worst case, an average of 10 collisions per frame. With packet header overhead, voice headset data, and everything, with 8 frames’ worth of data in each packet, I expected to be just BARELY below the 8KB/s limit.

When I finally got the system up and running, it turned out the game was using less than 4KB/s. The average number of collisions per frame is closer to TWO than it is to 10 (unfunny side note: I had the right number, but the wrong numerical base. My answer was perfect in binary), even with a lot of stuff going on (though an individual frame may have many more, there are usually large spaces between collisions as waves of bullets smack into enemies). The most I’ve been able to get it up to with this system is about 5KB/s, which means the game still has a delightful 3KB/s of breathing room. I think I’ll keep it that way!

Final Remarks

Hopefully this has been an informative foray into the design of a network protocol for an arcade-style shoot-em-up game. I’m no network professional – in fact, this is the first network design I’ve ever done, so I’m sure people who do this stuff for a living are laughing at my pathetic framework. If anyone has any suggestions as to how I might improve my network model, I’m all ears – while it works pretty well, I’m always open to ideas!

In my previous post, I weighed the advantages and disadvantages of my game vs. a standard FPS with regard to networking. After doing so, I came up with an initial network design.

The biggest issue, personally, was dealing with the causality of the network game. Each player gets information about the other with a delay, so neither player is ever seeing exactly the same set of circumstances (perfectionism and network lag don’t mix very well). I think Shawn Hargreaves describes it best in one of his networking presentations: he says to treat each player’s machine as a parallel world. They’re not exact, but the idea is to try to make them look as close as possible. It doesn’t matter if things don’t happen exactly the same, but you don’t ever want the following conversation to occur:

Player 1: “Wow, can you believe I killed that giant enemy at the last second? That was amazing!”

Player 2: “…What giant enemy?”

Procyon’s Initial Design

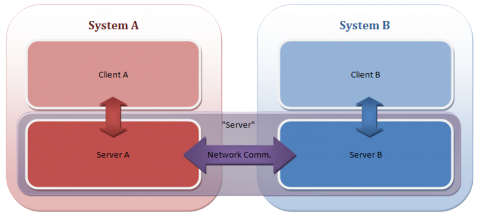

The design I started with was a hybrid of both the peer-to-peer lockstep and client-server models.

Basically, within each system there is a client/server pair. In addition, each server runs in lock-step with the other, so that they both always tick with the exact same player inputs for a given frame, keeping the two machines’ servers perfectly in sync. Since the only data being sent across the network is player inputs, bandwidth use was ridiculously low. And since nothing ever has to round-trip from the client to the server and back, the system still gets the effectively-halved-ping that a pure lockstep setup gets. Also, because the client and the server are running on the same machine, communications between the two (mostly, the server notifying the client of events that happened based on the other player’s input) becomes very trivial – the server can just call callbacks to the client, and no sort of RPC mechanism is necessary.

Ignoring the clients for a moment, the servers are a very traditional lockstep system. Each normal tick, the player input is polled and then sent across the network. Once the server receives the input from the remote system for the next frame, it ticks forward. As you can imagine, each machine’s server is a bit lagged, because it has to wait for inputs across the network. That’s where the client comes in.

Each system also runs a client, which runs ahead of the server. This client always ticks every (in Procyon’s case) 60th of a second, processing the local player’s inputs right away, and running prediction on the remote player based on the last set of inputs and the last known position that the server knows about. That way, the local player’s ship always reacts right away to player inputs (on the client, which is represented on-screen).

Essentially:

- The current local player’s input is polled and sent to the server.

- The input is also sent immediately across the network to the other machine.

- The client ticks using this input – the position and actions of the remote player are predicted based on the last-known input and position from the server.

- If the server has the remote input for the next frame that it has to do (which is always an earlier frame than the client), it also ticks (no prediction necessary on the server, as it has up-to-date information about both players for the given frame it’s simulating). Again, there is a server on both machines, but they’ll both end up with the exact same simulation.
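The four steps above can be sketched as a toy Python model. Everything in it is a simplification for illustration: positions are one-dimensional integers, an "input" is just a movement amount, the network is a list with a fixed delay and no loss, and `Machine` and its members are hypothetical names.

```python
class Machine:
    """One system: a client that never waits, plus a lockstepped server."""
    def __init__(self):
        self.client_frame = 0
        self.server_frame = 0
        self.local_pos = 0        # client's view of the local player
        self.server_pos = [0, 0]  # server's [local, remote] positions
        self.local_inputs = {}
        self.remote_inputs = {}
        self.last_remote_input = 0
        self.outbox = []          # stand-in for the network connection

    def on_network(self, frame, remote_input):
        self.remote_inputs[frame] = remote_input

    def step(self, local_input):
        frame = self.client_frame
        self.local_inputs[frame] = local_input    # 1. hand input to the server
        self.outbox.append((frame, local_input))  # 2. send it to the other machine
        self.local_pos += local_input             # 3. client ticks immediately
        self.client_frame += 1
        # 4. server ticks only when it has the remote input for its next frame
        while self.server_frame in self.remote_inputs and self.server_frame < self.client_frame:
            f = self.server_frame
            self.last_remote_input = self.remote_inputs[f]
            self.server_pos[0] += self.local_inputs.pop(f)
            self.server_pos[1] += self.remote_inputs.pop(f)
            self.server_frame += 1

    def predicted_remote_pos(self):
        # Extrapolate from the server's last known position and input.
        frames_ahead = self.client_frame - self.server_frame
        return self.server_pos[1] + self.last_remote_input * frames_ahead


DELAY = 3  # frames of one-way network delay in this toy run
a, b = Machine(), Machine()
for frame in range(10):
    a.step(1)  # player A always moves +1
    b.step(2)  # player B always moves +2
    if frame >= DELAY:
        a.on_network(*b.outbox[frame - DELAY])
        b.on_network(*a.outbox[frame - DELAY])
```

After ten frames each client is at frame 10 while both servers sit at frame 6, and the two servers hold mirror-image copies of the same state.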

(Mostly-) Deterministic Enemies

Here’s where one of the advantages of the game comes into play. In my last post, I mentioned that enemies are deterministic in their movements. This is actually a key part of the networking. What it means is, there is no prediction required for simulating enemy behaviors ahead of the server. For instance, say that the client is currently 5 frames ahead of the server. While the client is ticking frame 450, the server is only on 445 (because it hasn’t received network input for frame 446 from the other machine yet). Even though the server hasn’t simulated enemy movements yet, their movements are very strictly defined, so the client doesn’t have to guess – it knows exactly where an enemy is on frame 450. Consequently, the client running ahead of the server is not a problem with regards to enemy positions – when you see an enemy in a specific place on the screen, you know that’s exactly where it will be on the server when the server finally simulates that same frame.
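To make "deterministic" concrete, here is a tiny Python sketch in which an enemy's position is a pure function of the frame number. The straight-line motion and the `enemy_position` helper are assumptions for illustration; Procyon's real movement patterns aren't shown here.

```python
def enemy_position(spawn_frame, start, velocity, frame):
    """Integer-only position of an enemy on a given frame."""
    age = frame - spawn_frame
    return (start[0] + velocity[0] * age, start[1] + velocity[1] * age)


# The client asks about frame 450 now; the server asks once it catches up.
client_view = enemy_position(400, (100, 0), (0, 3), 450)
server_view = enemy_position(400, (100, 0), (0, 3), 450)
```

Neither side needs any information from the other to agree on where the enemy is on frame 450.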

Unless the other player kills it first.

That’s right – there is exactly one case in which an enemy’s behavior is non-deterministic, and it’s most-easily described as follows: your local client is currently simulating frame 450, the server has simulated frame 445. You see a large cannon ship start to charge up its beam weapon for a massive attack. However, the server gets remote player input from frame 446 (the next frame it has to simulate), and when simulating it, realizes that the other player dealt the killing shot to the ship. Suddenly, the client’s view of that enemy is wrong – it should have died four frames prior.

In essence, the only way that a client’s view of an enemy is ever wrong is if the other player has killed it in the past, and the server hasn’t caught up. This is a very important property: the only time, ever, that an enemy is not where you see it as is when it’s not anywhere at all (because it already died). However, this is where it starts to get tricky.

Most of the time, it’s not a big issue. The client kills the enemy as soon as the server tells the client that the enemy should be dead, and it just dies a few frames too late. But when the enemy has fired bullets (or any other type of weapon), suddenly there’s a problem. What has to happen in that case is that the client has to remove the bullets that shouldn’t really have been spawned. With reasonable pings, the bullets will generally be close enough to the enemy’s death explosion that they won’t really be noticeable; they’ll blink up in the middle of the explosion and disappear by the time it’s done. With larger lag times, though, bullets for dying enemies might spontaneously disappear. Ah, lag. How networked games love you so.

The real problem, though, is when, using the frame numbers above, you crash your ship into an enemy on tick 450 that actually died on tick 446. What then? You crashed into something that shouldn’t even have been there! After much internal debate, I decided there’s a really simple way to arbitrate this: on your screen, you crashed, so you still get the consequences. Even though you hit something (either a should-have-been-dead enemy or a bullet spawned from such an enemy) that shouldn’t have even been there, you still ran into something on your screen, and still pay the price. So, in addition to player inputs that get sent to the server (and across the network), the client also sends a flag that says “I died!”

As an added bonus, the server no longer needs to calculate collisions against either player – when a player dies, the client signals it (this plays into another advantage of my game – in a competitive multiplayer game, you would never, ever trust a client machine to tell you whether or not its player got hit by something).

Random Number Generation

One issue has to do with random number generation. Obviously, when connecting the two machines across the network, the machines have to agree on a random number seed so that random numbers generated on each are the same. In fact, not only do the machines on either side of the network have to have the same random number seeds, but the client and server also have to start with the same seeds, or they’ll get out of sync (a machine getting out of sync with itself is always funny).

However, what happens when an enemy (Smallship 5) shoots a bullet in a random direction, and then dies “in the past” based on a server correction? On the server(s), this random number generation never happened. On the client, however, a bullet was shot, and a random number was created. With a single random number generator, that means all of the random numbers from that point on on the client are going to be incorrect. Thankfully, there’s an easy way around that.

Give every enemy its own random number generator!

I found a very lightweight (and very high-quality) random number generator: Marsaglia’s multiply-with-carry generator (or, if you prefer, the infinitely more difficult-to-read Wikipedia version). This generator only requires two unsigned integers for each generator to do its magic, so there wasn’t much overhead to hand one of these off to each enemy. So, the initial random number seed is decided upon before the level begins to load. As the level begins to load, and enemies are created, a new generator is created for each entity, using random seeds generated from the original generator. This way, each enemy gets its own generator, and if an enemy ever generates any extra random numbers before it dies, it doesn’t affect any of the other enemies at all! Problem solved.
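Here is a Python sketch of the per-enemy generator idea. The two-word update with multipliers 36969 and 18000 is the commonly published form of Marsaglia's multiply-with-carry generator; whether Procyon uses exactly these constants is an assumption, and `make_enemy_rngs` plus the XOR constant used to derive the second master seed are made up for this example.

```python
MASK32 = 0xFFFFFFFF


class MwcRandom:
    """Two-word multiply-with-carry generator producing 32-bit values."""
    def __init__(self, seed_z, seed_w):
        # Zero seeds are replaced, since a zero word would stay zero forever.
        self.z = (seed_z & MASK32) or 362436069
        self.w = (seed_w & MASK32) or 521288629

    def next_uint(self):
        self.z = (36969 * (self.z & 0xFFFF) + (self.z >> 16)) & MASK32
        self.w = (18000 * (self.w & 0xFFFF) + (self.w >> 16)) & MASK32
        return ((self.z << 16) + self.w) & MASK32


def make_enemy_rngs(level_seed, enemy_count):
    """Seed one generator per enemy from a single agreed-upon level seed."""
    master = MwcRandom(level_seed, level_seed ^ 0x9E3779B9)
    return [MwcRandom(master.next_uint(), master.next_uint())
            for _ in range(enemy_count)]


# The client's copy of enemy 0 draws extra numbers before a server correction.
client_rngs = make_enemy_rngs(1234, 3)
server_rngs = make_enemy_rngs(1234, 3)
for _ in range(5):
    client_rngs[0].next_uint()
client_values = [client_rngs[1].next_uint() for _ in range(4)]
server_values = [server_rngs[1].next_uint() for _ in range(4)]
```

Enemy 1's sequence is identical on both sides, even though enemy 0's generator has drifted on the client.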

Remote Player Bullets

Again, pretend the client is ticking frame 450. When the server reaches 445, it notices that the remote player fired a bullet. So it notifies the client: “Hey, 5 frames ago, the other player fired a shot!”

- The client knows that it needs to spawn a new remote player bullet.

- It also knows exactly where that bullet will be at the current tick (since it was spawned at tick 445, but it’s now tick 450, it can tick the bullet forward 5 frames).

- It does not, however, know that the bullet will actually survive until tick 450. It’s possible that it hit something anywhere from tick 446 through 449.

Even though it knows exactly where the bullet should be, it doesn’t display it there. It actually starts the bullet in front of its current estimate of where the remote player is (the predicted position), and interpolates it into the correct spot. That way, the remote player’s bullets don’t seem to appear way out in front of the remote player; they still start right where they seem like they should.

The client knows exactly where the bullet should be, but not that it’s actually there (because it might have died on, say, tick 448). So while it does collision detection against enemies, if the remote bullet hits an enemy, it doesn’t actually do any damage on the client – it just removes the bullet. If it turns out it dealt damage after all, the server will eventually send a correction to the client.

However, eventually, the server reaches frame 450 (when the client first learned about the bullet). If the bullet is still alive on the server, then we know that it never hit anything from frame 445 until then, so it was a live bullet when the client found out about it. Also, if it’s still alive on the client (it didn’t collide with anything while the server caught up to frame 450), that means the client knows that the bullet is still alive.

Now that it knows that it has a bullet that is guaranteed to still be alive, and is at the exact position that it’s supposed to be, the bullet can flip from being treated as a remote bullet to being treated exactly like a locally-fired bullet. Basically, this bullet now will deal damage and act exactly like a bullet fired by this machine’s own player! It’s one less thing that will act different on the other machines, further strengthening the illusion that both players are seeing the same thing.
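The promotion rule above can be sketched as a small two-mode object. This is only an illustration under assumed names (`RemoteBullet`, `BulletMode`, `OnServerReachedTick`); the actual message flow and bullet classes in the game are not shown in this post.

```csharp
using System;

public enum BulletMode { Provisional, Confirmed }

// Client-side view of a bullet fired by the remote player (hypothetical type).
public sealed class RemoteBullet
{
    // Client tick at which the client first learned about the bullet (450 in the example).
    public int AnnouncedTick { get; private set; }
    public bool Alive { get; private set; }
    public BulletMode Mode { get; private set; }

    public RemoteBullet(int announcedTick)
    {
        AnnouncedTick = announcedTick;
        Alive = true;
        Mode = BulletMode.Provisional;
    }

    // Called when the bullet overlaps an enemy on the client.
    // Returns true only if the client should apply damage itself.
    public bool OnHitEnemy()
    {
        Alive = false;                        // the bullet is removed either way
        return Mode == BulletMode.Confirmed;  // provisional bullets deal no local damage
    }

    // Called as the server simulation catches up. Once the server has reached the
    // announcement tick with the bullet alive on both sides, treat it as a local bullet.
    public void OnServerReachedTick(int serverTick, bool aliveOnServer)
    {
        if (Alive && aliveOnServer && serverTick >= AnnouncedTick)
            Mode = BulletMode.Confirmed;
    }
}
```

A bullet that hits something while still provisional simply disappears on the client, and any damage it really dealt arrives later as a server correction, as described above.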

Floating Points Are Sharper Than Expected

However, there was one big issue with this entire design: floating point errors. Due to minute differences in the way floating point numbers are calculated on different systems (due to different CPUs, code optimizations, quantum entanglement, etc), the collision code on each system acted slightly differently. Consequently, the two servers running in lockstep weren’t actually in sync. This was causing all sorts of issues – slight timing differences on enemy deaths caused discrepancies in scores and energy amounts…and the real kicker is that it was possible that two objects would collide on one system and never collide at all on the other (a grazing collision on one might not trigger as a collision on the other). As an added bonus, an enemy might die on one server just after shooting a ton of bullets, but just before shooting them on the other server. Suddenly, one player’s screen would be full of enemy bullets, and the other’s would be clear as the summer sky.

This became a real problem, and it took a while to solve it (again, perfectionism and networking don’t mix)…and next time, I’ll present the solution.

I haven’t had much development time in the last (nearly two) month(s), but I have had time to nearly finish up the networking code for the game, so I thought I would describe some of the work that went into the design of the system.

Resources

I tried to find some references on how most shmups (shoot-em-ups) such as my current game handle their networking, but I didn’t really find anything. There are a lot of resources on how RTS games do it (such as this great article about Age of Empires’ networking), and even more articles about how to write networking for an FPS game (the best of which, in my opinion, are the articles about the Source engine’s networking and the Unreal engine’s networking). There’s also a great series of articles on the Gaffer on Games site.

One of the most seemingly-relevant things to my game that I read was that, in current system emulators (for instance, SNES emulators) that have network play, they work in lock step with each other…that is, each system sends the other system(s) its inputs, and when all inputs from all systems have arrived, they can tick the simulation forward. I did an early experiment to see how well this would work with my game, and it turns out, not so well – the latency introduced with even a moderate ping (100ms) is rather prohibitive. The player would press up on the gamepad and have to wait for the ship to start moving…and it would only get worse with higher latencies! Obviously, this wouldn’t work.

Since there were many articles about the FPS model of networking, I opted to use it as a starting point, as my game is also a fast-action game. First off, I decided to make a (partial) list of the advantages and disadvantages of my game type vs. the FPS model:

Advantages of Procyon vs. an FPS

Only two players are supported, which greatly simplifies the bandwidth restrictions and the communication setup.

There’s no need to support joining/quitting in the middle of a game (again, simplifying game communications and state management)

Since it’s cooperative, I’m not really worried about players cheating, so I can allow clients to be authoritative about their actions more so than the average FPS can.

Players can’t collide with each other, so there’s no need to worry about such things (in fact, a player basically can’t affect the other player at all – a player can only affect enemies)

Enemies are deterministic in their movements – none of my enemy designs have movement variation based on the player’s actions, so collision vs. enemies in a given frame is always accurate (unless the enemy was dead “in the past” due to the actions of the other player, which is discussed later).

Disadvantages

In an FPS, the view is more limited – it’s rare that a player sees all of the action at once due to the limited field of view. However, in Procyon, everything that is happening in the game is completely visible on both screens, meaning the game generally has to be less lenient about discrepancies.

Most FPS games are “instant hit.” For instance, when you fire a pistol in a FPS game, there’s no visible bullet that streaks across the screen, so it’s easier to fudge the results on the server. In Procyon, however, all of the weapons are on-screen and visible, so fudging them in a minimally-noticeable way becomes very difficult.

In many FPS games (though not all), there are WAY fewer entities active at a time than there are in a shoot-em-up.

This falls into the realm of “not a lot of references for this type of game,” but many of the “cheats” employed by FPS game programmers are really well-known, but very domain-specific; with a shmup, I’d have to make up my own network fakery to hide latency.

Network Strategies

There are two main network strategies used in games (at least, in those that I researched): lockstep and client-server.

Lockstep keeps all systems involved perfectly in sync, though at the cost of making it more susceptible to latency, because the game doesn’t step until the other player’s inputs have traversed the network. Generally, every so often (say, every 1/30th of a second), the current input is polled and then sent across the network. Then, once both/all inputs have reached the local machine, it ticks the game. This is the type of networking used by emulators (out of necessity – there’s no reliable way to do any sort of prediction with an emulator anyway) and some RTS games (such as Age of Empires, linked above). RTS games can hide the latency a bit by causing all commands to execute with a bit of a delay. One interesting property of lockstep networking is that nothing ever has to round-trip across the network. That is, nothing that machine B sends ever relies on something that machine A sent previously. Because of this, the game’s latency is effectively halved, as the meaningful measurement is one-way latency, not a full there-and-back ping. Lockstep games are frequently done peer-to-peer: each system sends its inputs to each other system…there’s no central entity in charge of all communications.
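To make the lockstep rule concrete, here is a minimal two-player sketch: the simulation only advances once both inputs for the current tick are available. The names (`LockstepSession`, `SubmitLocalInput`, `ReceiveRemoteInput`) and the single-byte input encoding are assumptions for illustration; real networking and input polling are left out.

```csharp
using System;
using System.Collections.Generic;

public sealed class LockstepSession
{
    private readonly Dictionary<int, byte> localInputs = new Dictionary<int, byte>();
    private readonly Dictionary<int, byte> remoteInputs = new Dictionary<int, byte>();
    private readonly Action<int, byte, byte> simulate;

    public int CurrentTick { get; private set; }

    // 'simulate' advances the game by one tick given (tick, localInput, remoteInput).
    public LockstepSession(Action<int, byte, byte> simulate)
    {
        this.simulate = simulate;
    }

    public void SubmitLocalInput(int tick, byte input) { localInputs[tick] = input; }

    // Called when the other machine's input for 'tick' arrives over the network.
    public void ReceiveRemoteInput(int tick, byte input) { remoteInputs[tick] = input; }

    // Steps the simulation only if BOTH inputs for the current tick have arrived.
    // Returns false when the game has to stall and wait for the network.
    public bool TryStep()
    {
        byte local, remote;
        if (!localInputs.TryGetValue(CurrentTick, out local) ||
            !remoteInputs.TryGetValue(CurrentTick, out remote))
            return false;

        simulate(CurrentTick, local, remote);
        localInputs.Remove(CurrentTick);
        remoteInputs.Remove(CurrentTick);
        CurrentTick++;
        return true;
    }
}
```

The stall in `TryStep` is exactly where the latency cost shows up: every tick waits for a one-way trip from the other machine, but never for a round trip.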

Conversely, a client-server architecture (which is used by an overwhelming majority of FPS games) puts one system in charge and generally gets authority over the activities of all players. In this case, the client sends its information to the server (inputs, positions, etc), and the server sends back any corrections that need to be made (locations/motion of the other players, shots fired, things hit/killed, etc). The client can run ahead of the server based on a prediction model, where it gives its best guess as to where things are currently based on the last communication from the server. This makes game lag much less apparent (at least, in the player’s own movements), as the player’s inputs can be processed immediately on the local machine.

In the next installment, I’ll talk through Procyon’s initial network design, which is a bit of a hybrid between the client-server and lockstep architectures…stay tuned!

Level 1 is effectively complete (excluding some global gameplay and balance issues.)

Here’s a video:

Yay!

This will probably be the norm for a while, unless I find myself with an excess of time some night; there’ll probably just be some project updates for a few weeks.

Progress on finishing the first level is going well: all of the main enemies are implemented, the level 1 boss is designed (but not yet coded), and I’m on my way towards getting the level done by the end of the month.

(Screens and videos below the fold)

Enemies

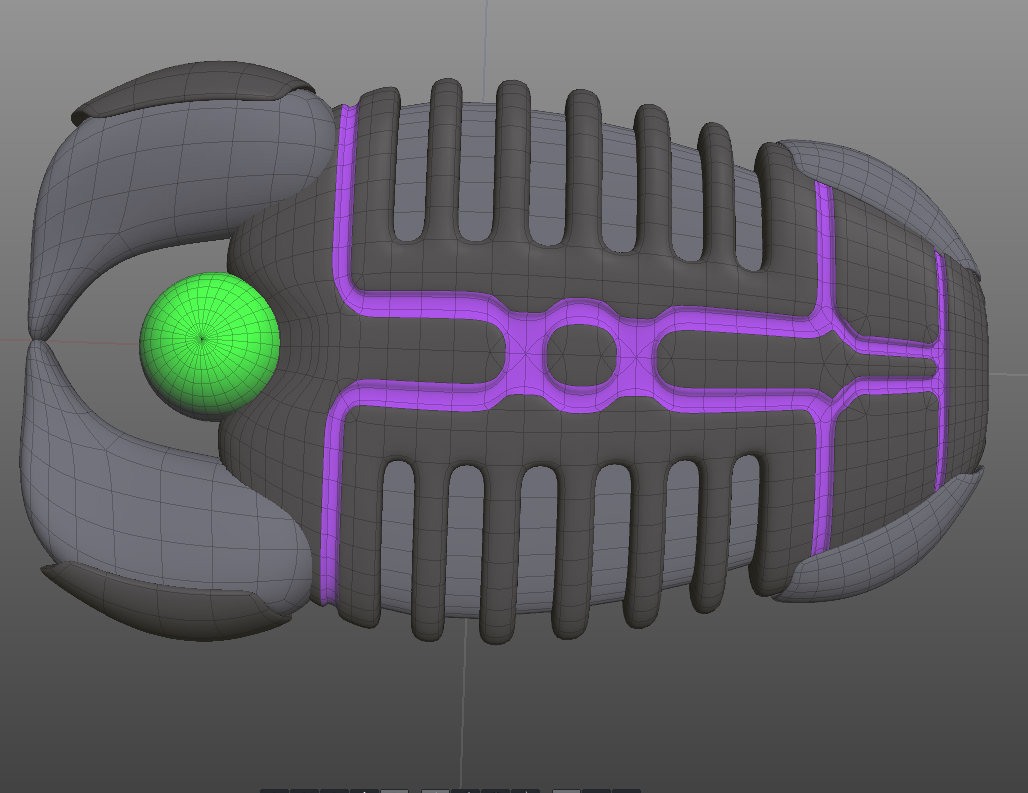

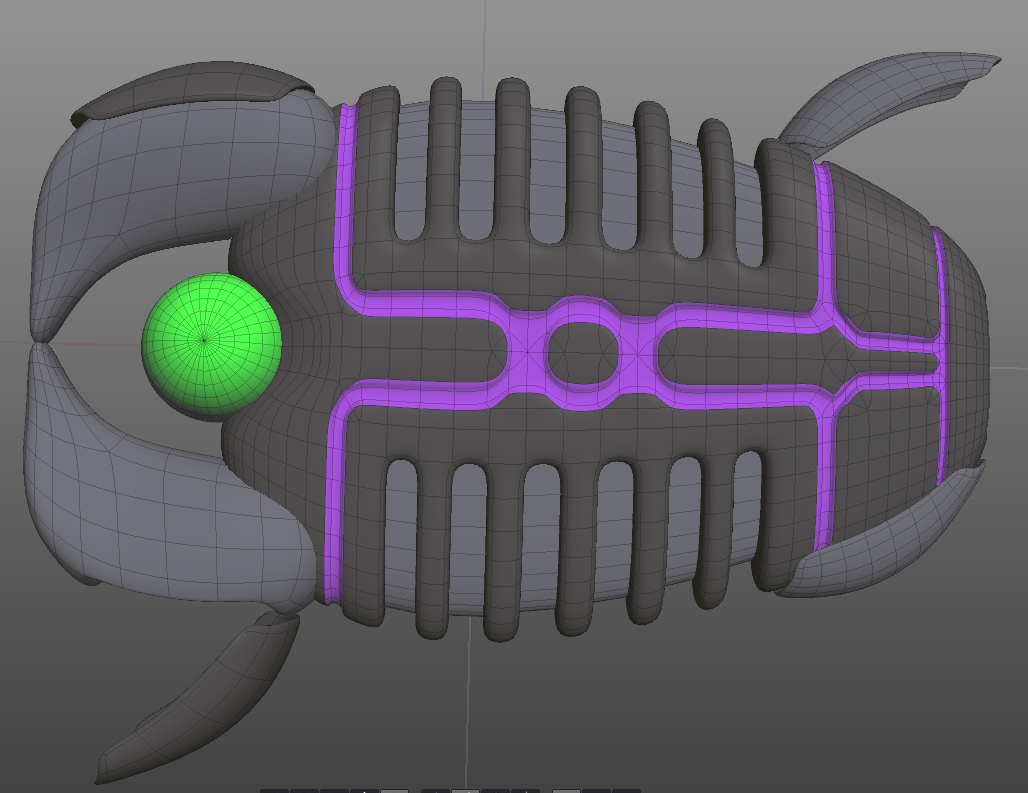

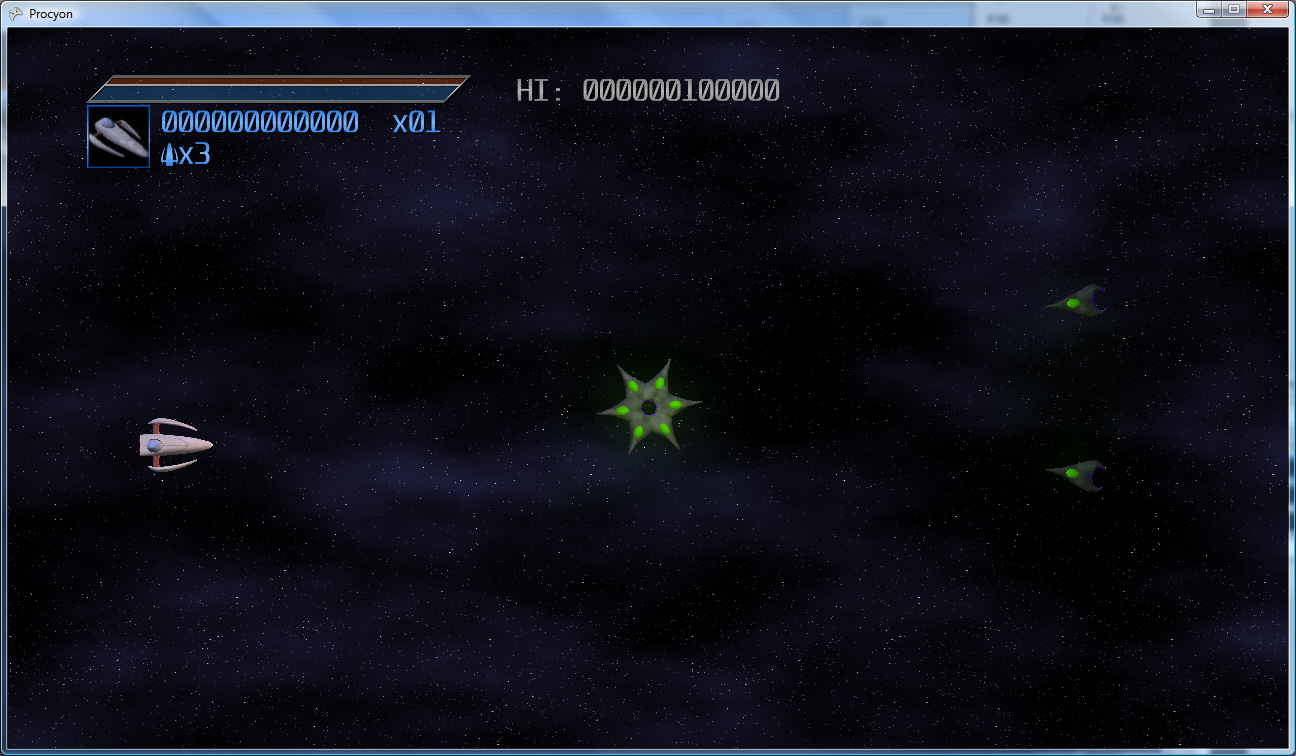

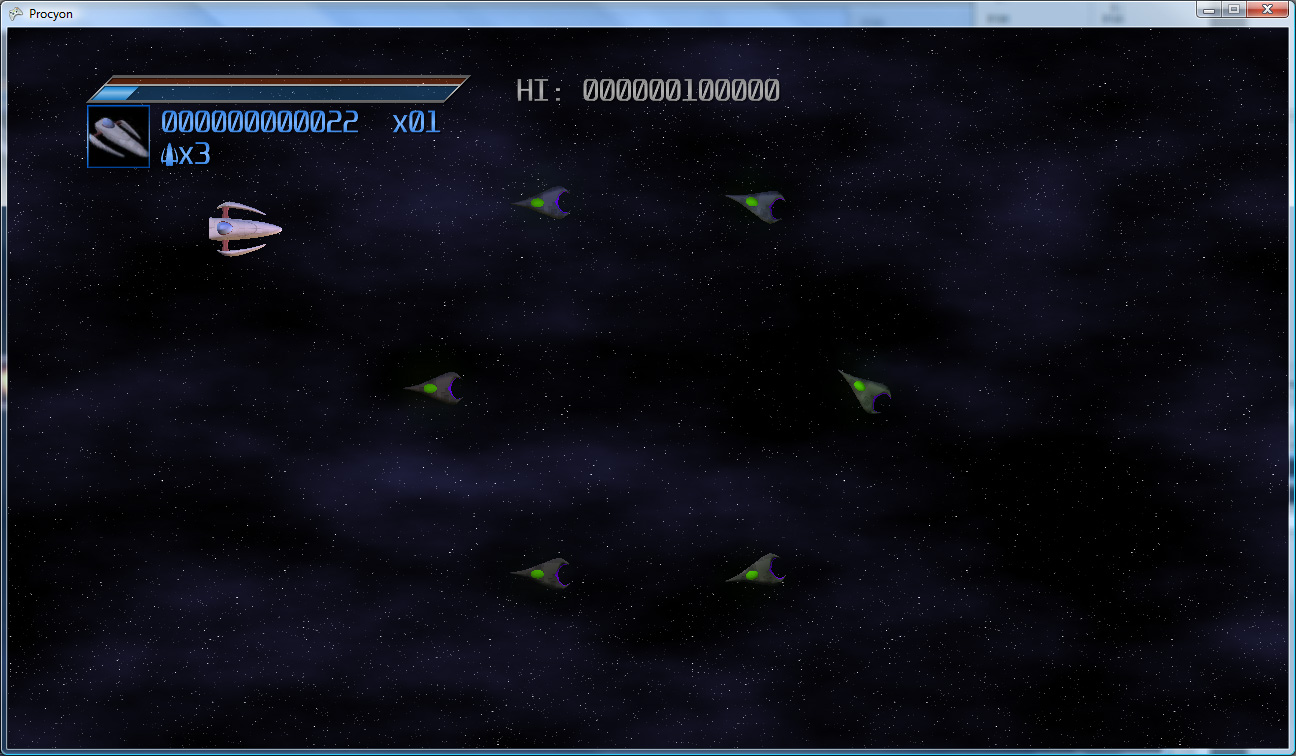

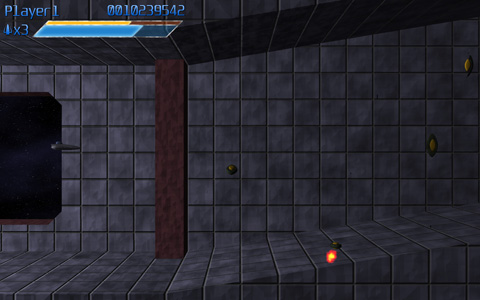

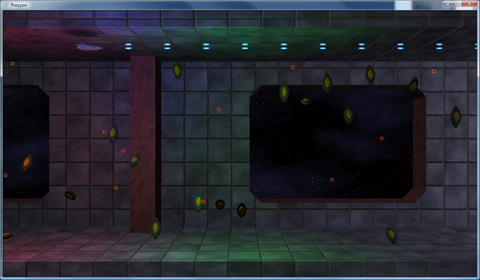

For the enemy designs, I looked to things like insects and aquatic creatures to get ideas for shapes and the like. Some screenshots!

And, a couple videos of the enemies in action:

Current Work

The next few items of work are:

- Finish the implementation of the boss of level 1

- Lay out the level

- Add the scrolling background

- Profit!

I haven’t made any specific posts about my game in a while, so I thought I’d just make a quick status update.

Code Progress

Progress is coming along quite nicely. Most of the game systems are done (though I have some modifications to make to enable things like curved enemy beam weapons and side-by-side ship paths).

- Enemies have multiple ways of spawning, and can follow paths (or not).

- All four weapons are implemented

- The player can now die (and respawn). Lives are tracked (but the game currently doesn’t game-over if the player dies, as that would be a pain for testing).

- The scoring system is in (with a first run at a score multiplier system).

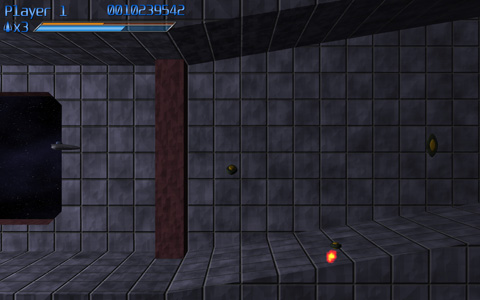

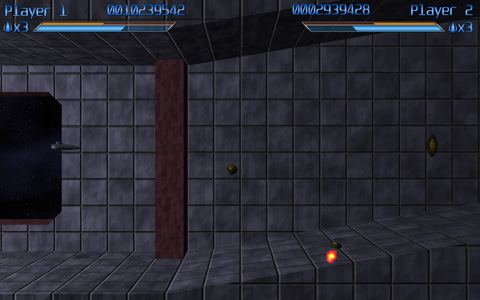

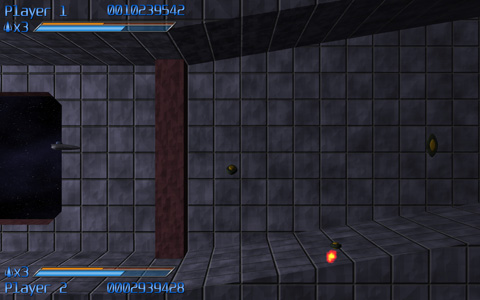

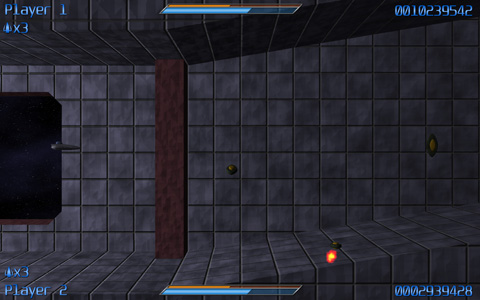

- The on-screen display has been completely redesigned.

- Two players can play at once, which includes a special combination lightning attack that is even more devastating than the standard lightning attack.

(Screens and videos below the fold)

Here is a video of a lot of those systems in action:

Art Work

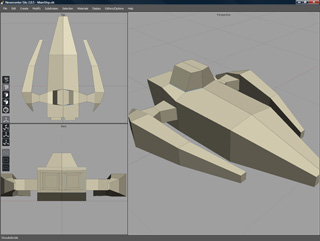

For the last few evenings, I’ve been working on designs for the enemy ships (though I’ll note that the background is still very much throwaway temp art). I plan to have about 5 or 6 types of ships in the first level of the game (not counting the boss) – and the goal is to have at least 2 new types in each successive level of the game.

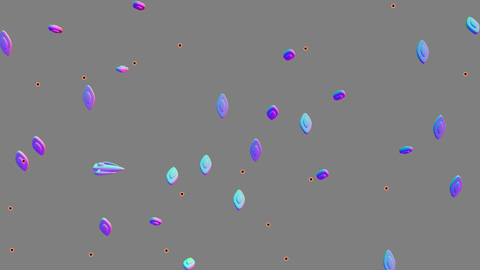

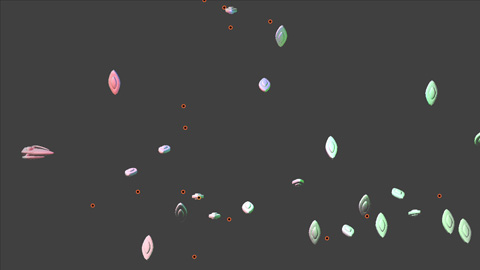

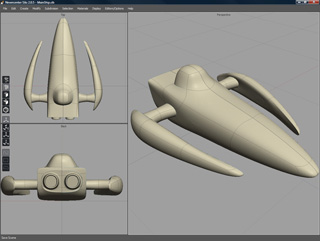

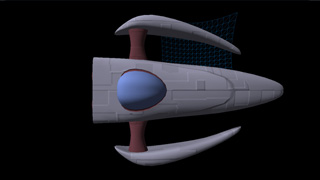

Here are a few of the current designs:

The goal is to make the ships look fairly organic – I’m completely avoiding hard edges (except for claw-like points), and trying to make them as smooth and rounded as possible. Thanks to Silo and its subdivision surfaces, this is actually pretty easy 🙂 The gray/green/purple combination feels, to me, rather otherworldly, and it’s less “standard” than the traditional black and red alien ship motif (i.e. Cylon ships). The color scheme will pose some interesting problems when it comes time to make levels inside of alien locations though – I’ll need a way to make the alien ships stand out from the background, while simultaneously making the background feel like it belongs to the same creatures that are piloting those ships.

Goals

I’m aiming to have two full levels of the game done by the end of next month, which should give me adequate time to get everything that needs to be implemented for them implemented, and polish them to a nice shine. There’s still quite a bit to do:

- As stated above, I want to allow curved beam weapons from the enemies (the player’s curved beam weapon is currently a special case)

- Also stated above, I want to be able to have enemies spawn side-by-side along paths (so that I can easily make them fly in in formations instead of just one at a time)

- There’s no sound/music code yet, though with XNA that’s pretty straightforward.

- Controller rumble!

- I’m sure there’s some code that I’ll need to add to enable giant boss fights.

- Not to mention the additional art/sound/music work to make it all shine.

It’s going to be an interesting month and a half. Off I go, I’ve got work to do!

The .NET framework’s reflection setup can be amazingly useful. At a basic level, it allows you to get just about any information you could want about any type, method, assembly, etc. In addition, you can programmatically access type constructors and methods, and invoke them directly, allowing you to do all sorts of neat stuff.

One useful application of this is in the creation of state machines. Imagine an entity in a game that flies around in a pattern for a bit, then stops to shoot some bullets, then returns to flying. Such an entity would have two states, “Flying” and “Shooting.”

A First Attempt

You might decide to create a state machine that could handle this entity, but also be usable for all entities that need a state structure. What should a state machine like that need to be able to do?

One such set of possibilities is as follows:

- Be able to assign a state based on a string state name.

- Each state has three functions:

- Begin – This function is called whenever the state is transitioned into, allowing it to initialize anything that needs it.

- Tick – This is the meat of a state, it is called once per game tick while the state is active.

- End – This is called when the state is transitioned out of, allowing any clean up. Note that this is called before the next state’s Begin function is called.

- However, a state shouldn’t have to specify functions that it doesn’t need. If a state has no need for the Begin function, it doesn’t need to implement it.

- If the state changes during a Tick call, the new state will tick as soon as it happens. This allows many states to fall through in rapid succession. For instance, a player character in a 2D Mario-like puzzle might be in the middle of the “Jumping” state, while holding right on the controller. When the character hits the ground, it would transition into the “Standing” state, but from there, it would then transition into “Walking” because the player is holding right. That way, the character would land and immediately start walking, which is what one would expect when playing the game.

So what would a first draft version of such a machine need to look like? First, we’d need a place to store delegates to the three functions needed for a state:

    public delegate void StateDelegate();

    class StateInfo
    {
        public StateDelegate OnBegin { get; set; }
        public StateDelegate OnTick { get; set; }
        public StateDelegate OnEnd { get; set; }

        public void Begin()
        {
            if (OnBegin != null)
                OnBegin();
        }

        public void Tick()
        {
            if (OnTick != null)
                OnTick();
        }

        public void End()
        {
            if (OnEnd != null)
                OnEnd();
        }

        public StateInfo()
        {
            OnBegin = null;
            OnTick = null;
            OnEnd = null;
        }
    }

Given that, we can now store them in a dictionary, indexed by the state’s name (a string). To initialize it, we would just call AddState with the state name and three delegates (which may be null, to specify that a given function is unnecessary), and it would add that state to the list. We also need to be able to Tick the state machine and change the state. The whole contraption would look something like the following:

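    using System.Collections.Generic;

    public class StateMachine
    {
        // Maps each state's name to its Begin/Tick/End delegates.
        Dictionary<string, StateInfo> states = new Dictionary<string, StateInfo>();

        // True while Tick() is running, so a state change made mid-tick falls through.
        bool ticking = false;
        StateInfo currentStateInfo = null;
        string currentStateName = null;

        public void AddState(string stateName, StateDelegate begin, StateDelegate tick, StateDelegate end)
        {
            StateInfo info = new StateInfo();
            info.OnBegin = begin;
            info.OnTick = tick;
            info.OnEnd = end;
            states.Add(stateName, info);

            // The first state added becomes the initial state.
            if (currentStateName == null)
                State = stateName;
        }

        public string State
        {
            get { return currentStateName; }
            set
            {
                // End the old state before beginning the new one.
                if (currentStateName != null)
                    currentStateInfo.End();

                currentStateName = value;
                currentStateInfo = states[currentStateName];
                currentStateInfo.Begin();

                // If the change happened during a Tick, tick the new state right away.
                if (ticking)
                    currentStateInfo.Tick();
            }
        }

        public void Tick()
        {
            ticking = true;
            currentStateInfo.Tick();
            ticking = false;
        }
    }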

As you can see, it’s fairly straightforward. An entity can call AddState (probably during its constructor) on the state machine to add a new state. It can assign a new state by using State = "newState", which will end the current state and begin the new state. Finally, the entity just needs to call Tick to run the current state.

In practice, this might look something like:

    public class FlyShooter : EntityBase
    {
        StateMachine machine = new StateMachine();

        public FlyShooter()
        {
            machine.AddState("Flying", FlyingBegin, FlyingTick, null);
            machine.AddState("Shooting", null, ShootingTick, null);
        }

        void FlyingBegin()
        {
        }

        void FlyingTick()
        {
            if( IsTimeToShoot )
            {
                machine.State = "Shooting";
                return;
            }
        }

        void ShootingTick()
        {
            if( DoneShooting )
            {
                machine.State = "Flying";
                return;
            }
        }

        public override void Tick()
        {
            machine.Tick();
        }
    }

There’s not much to it.

One thing to note is that the way that the state fall-through works (that is, changing state in the middle of another state’s Tick function) will still cause the rest of the current function to execute (after it’s already partially run another state), so it’s best to either change states at the very end of the function or to return after setting the new state, as is done above.

Though this works pretty well as-is, say you want to add new states to an entity afterwards. What if the enemy now needs a special “Dying” state? Not only do you have to add new functions (DyingBegin, DyingTick, and DyingEnd), you also have to remember to call AddState at object startup. Wouldn’t it be nice if you could just add the new functions and it would just work? As it turns out, using .NET’s reflection functionality, you can do exactly that!

Mirror, Mirror On the Wall, What Are the States That I Can Call?

There are a number of ways to implement a state machine system using reflection. The one that was settled upon for Procyon was the design with the least redundancy (that is, “don’t repeat yourself”). State names only appear once in a given entity’s codebase (excepting when states are set), and it takes a small number of characters, right where the state code is defined, to mark a state. At the point that it’s added to the code, it’s already ready to use, there’s no additional list of states to update.

Most reflection code works by looking for .NET Attributes. Attributes are class objects that can be added as metadata to a class, a method, a property, or any number of other pieces of your code. Also, you can create your own attributes – program-specific metadata that you can later find.

In the case of the state machine, a very simple attribute is needed.

    using System;            // Attribute, AttributeUsage
    using System.Reflection; // used later by the state machine's constructor

    [AttributeUsage(AttributeTargets.Method)]
    public class State : Attribute
    {
    }

It doesn’t need any sort of custom data, so there’s not much to it. The AttributeUsage at the top (which is, itself, an attribute) specifies that the “State” attribute being defined can only be applied to methods. Attempting to add it to anything else (say, a class) would generate a compiler error.

With this attribute, you’ll be able to mark methods as states. In this case, each method will take a StateInfo as a parameter (meaning that the StateInfo class, which was described above, has to be a public class now). The method will then fill in the state information with delegates. The most elegant way to handle this is using anonymous delegates. Here’s that enemy class again, using the new method:

    public class FlyShooter : EntityBase
    {
        StateMachine machine;

        public FlyShooter()
        {
            machine = new StateMachine(this, "Flying");
        }

        [State]
        void Flying(StateInfo info)
        {
            info.OnBegin = delegate()
            {
            };

            info.OnTick = delegate()
            {
                if (IsTimeToShoot)
                {
                    machine.State = "Shooting";
                    return;
                }
            };
        }

        [State]
        void Shooting(StateInfo info)
        {
            info.OnTick = delegate()
            {
                if (DoneShooting)
                {
                    machine.State = "Flying";
                    return;
                }
            };
        }

        public override void Tick()
        {
            machine.Tick();
        }
    }

As you can see, the [State] attribute is there. Each of the (up to) three functions for a state is initialized inside of the state function, cleanly grouping the three functions together into one unit. The state function’s name is the state’s name, so it’s only specified once. And, of course, there’s no manual list manipulation; to add a new state, simply add the code for the new state and make sure that it’s marked by a [State] attribute…the runtime will take care of the rest.

To get this whole thing to work, though, there’ll obviously have to be some code to find methods using it. When creating your state machine, you’ll now want to pass in a reference to the object that the state machine belongs to. From that, it will be able to get the type of object for which you’re creating the state machine. Automatically adding states that are marked with your state attribute goes something like this:

- Scan through each method in the type of the object you passed in (if you want to be able to grab private methods from parent classes of the current object’s type, you’ll need to scan through the classes in the hierarchy one by one)

- If it doesn’t have the State attribute, continue on to the next method.

- If it does have the attribute, get the name of the method (this becomes the state’s name).

- Once you have the name of the method, call the method and let it populate a StateInfo that you hand to it.

- Once that’s done, add the populated StateInfo into the state dictionary, using the state method’s name as the key (and thus, the state name).

The AddState function can go away – it’s no longer necessary, as the state list is now updated completely automatically. The StateMachine class’ constructor needs to be updated a bit, to add all of this reflection magic into it. It will now look something like this:

    public StateMachine(object param, string initialState)
    {
        Type paramType = param.GetType();

        // Walk up the class hierarchy so private state methods on base classes are found too.
        for (Type currentType = paramType; currentType != typeof(object); currentType = currentType.BaseType)
        {
            foreach (var method in currentType.GetMethods(BindingFlags.Instance | BindingFlags.DeclaredOnly |
                                                          BindingFlags.NonPublic | BindingFlags.Public))
            {
                object[] attributes = method.GetCustomAttributes(typeof(State), true);
                if (attributes.Length == 0)
                    continue;

                // The method's name doubles as the state's name.
                string methodName = method.Name;
                if (states.ContainsKey(methodName))
                    continue;

                // Let the state method fill in its own Begin/Tick/End delegates.
                StateInfo info = new StateInfo();
                method.Invoke(param, new object[] { info });
                states.Add(methodName, info);
            }
        }

        if (initialState != null)
            State = initialState;
    }

None of the rest of the state machine class’ code needs to change; it all works exactly as it did before. The new constructor means that the list gets created at state machine creation time, but the rest of the internal data remains exactly the same.

Possible Improvements

It works pretty well as-is, but there are ways it could be improved. For instance:

- You could add a type of “Sleep” method to the state machine, to make it delay a certain number of ticks before continuing to tick the current state

- You could add a list of transitions to the StateInfo, so that all transitions to different states can be declared up-front, in an easy-to-read manner. That removes that extra state-setting clutter from your tick routine (and even means that a state may not need a Tick routine at all!)

- If you need multiple sets of states for objects (say the player needs a collection of states for movement and a separate collection of states for weapons fire), you could modify the State attribute to provide a collection name (like [State("Movement")]), and specify a state collection when creating your state machine.

This is a small list of possible changes. If you have other ideas, let me know!
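As one possible take on the state-collection idea from the list above, here is a hedged sketch of a State attribute that carries a collection name, plus a helper that finds only the methods in one collection. `StateScanner`, `FindStateNames`, the "Default" collection name, and `PlayerShip` are all hypothetical names for this example, and the state methods take no parameters here purely to keep it self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Variant of the State attribute with an optional collection name (assumed design).
[AttributeUsage(AttributeTargets.Method)]
public class State : Attribute
{
    public string Collection { get; private set; }

    public State() { Collection = "Default"; }
    public State(string collection) { Collection = collection; }
}

public static class StateScanner
{
    // Returns the names of the [State] methods on 'owner' that belong to 'collection'.
    public static List<string> FindStateNames(object owner, string collection)
    {
        var names = new List<string>();
        for (Type t = owner.GetType(); t != null && t != typeof(object); t = t.BaseType)
        {
            foreach (MethodInfo method in t.GetMethods(BindingFlags.Instance | BindingFlags.DeclaredOnly |
                                                       BindingFlags.NonPublic | BindingFlags.Public))
            {
                foreach (State attr in method.GetCustomAttributes(typeof(State), true))
                {
                    if (attr.Collection == collection && !names.Contains(method.Name))
                        names.Add(method.Name);
                }
            }
        }
        return names;
    }
}

// Example owner with two independent sets of states.
public class PlayerShip
{
    [State("Movement")] void Flying() { }
    [State("Movement")] void Dodging() { }
    [State("Weapons")] void Firing() { }
}
```

A state machine constructor could take the collection name as an extra argument and apply the same filter before invoking each state method, so one object can own a "Movement" machine and a "Weapons" machine side by side.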

You’re flying your ship down a cavern, dodging and weaving through enemy fire. It’s becoming rapidly apparent, however, that you’re outmatched. So, desperate to survive, you flip The Switch. Yes, that switch. The one that you reserve for those…special occasions. Your ship charges up and releases bolt after deadly bolt of lightning into your opponents, devastating the entire enemy fleet.

At least, that’s the plan.

But how do you, the game developer, RENDER such an effect?

Lightning Is Fractally Right

As it turns out, generating lightning between two endpoints is deceptively simple. It can be generated as an L-System (with some randomization per generation). Some simple pseudo-code follows. (Note that this code, and really everything in this article, is geared towards generating 2D bolts; in general, that’s all you should need. In 3D, simply generate a bolt such that it’s offset relative to the camera’s view plane, or do the offsets in the full three dimensions; it’s your choice.)

    segmentList.Add(new Segment(startPoint, endPoint));
    offsetAmount = maximumOffset; // the maximum amount to offset a lightning vertex.

    for each generation (some number of generations)
        for each segment that was in segmentList when this generation started
            // startPoint and endPoint below are the endpoints of the current segment.
            segmentList.Remove(segment); // This segment is no longer necessary.

            midPoint = Average(startPoint, endPoint);
            // Offset the midpoint by a random amount along the normal.
            midPoint += Perpendicular(Normalize(endPoint-startPoint))*RandomFloat(-offsetAmount,offsetAmount);

            // Create two new segments that span from the start point to the end point,
            // but with the new (randomly-offset) midpoint.
            segmentList.Add(new Segment(startPoint, midPoint));
            segmentList.Add(new Segment(midPoint, endPoint));
        end for

        offsetAmount /= 2; // Each subsequent generation offsets at max half as much as the generation before.
    end for

Essentially, on each generation, subdivide each line segment into two, and offset the new point a little bit. Each generation has half of the offset that the previous had.
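For anyone who wants something closer to compilable code, here is a rough C# rendering of the pseudo-code above. It uses `System.Numerics.Vector2` rather than XNA's vector type so that it stands alone, and the `Segment` struct and `LightningBolt.Generate` signature are assumptions for this sketch. It builds a fresh list each generation instead of removing segments in place, and it assumes the two endpoints are distinct.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public struct Segment
{
    public Vector2 Start;
    public Vector2 End;

    public Segment(Vector2 start, Vector2 end)
    {
        Start = start;
        End = end;
    }
}

public static class LightningBolt
{
    // Midpoint-displacement bolt: each generation splits every segment in two
    // and nudges the new midpoint along the segment's perpendicular.
    public static List<Segment> Generate(Vector2 start, Vector2 end, int generations,
                                         float maximumOffset, Random rng)
    {
        var segments = new List<Segment> { new Segment(start, end) };
        float offsetAmount = maximumOffset;

        for (int g = 0; g < generations; g++)
        {
            var next = new List<Segment>(segments.Count * 2);
            foreach (Segment s in segments)
            {
                Vector2 mid = (s.Start + s.End) * 0.5f;
                Vector2 dir = Vector2.Normalize(s.End - s.Start);
                Vector2 normal = new Vector2(-dir.Y, dir.X);

                // Random offset in [-offsetAmount, offsetAmount).
                float offset = (float)(rng.NextDouble() * 2.0 - 1.0) * offsetAmount;
                mid += normal * offset;

                next.Add(new Segment(s.Start, mid));
                next.Add(new Segment(mid, s.End));
            }
            segments = next;
            offsetAmount /= 2f; // each generation offsets at most half as much
        }
        return segments;
    }
}
```

Because the list is rebuilt in order, the returned segments run contiguously from the start point to the end point, which is convenient when turning the bolt into geometry later.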

So, for 5 generations, you would get:

That’s not bad. Already, it looks at least kinda like lightning. It has about the right shape. However, lightning frequently has branches: offshoots that go off in other directions.

To do this, occasionally when you split a bolt segment, instead of just adding two segments (one for each side of the split), you actually add three. The third segment just continues in roughly the first segment’s direction (with some randomization thrown in):

    direction = midPoint - startPoint;
    splitEnd = Rotate(direction, randomSmallAngle)*lengthScale + midPoint; // lengthScale is, for best results, < 1. 0.7 is a good value.
    segmentList.Add(new Segment(midPoint, splitEnd));

Then, in subsequent generations, this, too, will get divided. It’s also a good idea to make these splits dimmer. Only the main lightning bolt should look fully-bright, as it’s the only one that actually connects to the target.

Using the same divisions as above (and using every other division), it looks like this:

Now that looks a little more like lightning! Well…at least the shape of it. But what about the rest?

Adding Some Glow

Initially, the system designed for Procyon used rounded beams. Each segment of the lightning bolt was rendered using three quads, each with a glow texture applied (to make it look like a rounded-off line). The rounded edges overlapped, creating joints. This looked pretty good:

…but as you can see, it tended to get quite bright. It only got brighter, too, as the bolt got smaller (and the overlaps got closer together). Trying to draw it dimmer presented additional problems: the overlaps became suddenly VERY noticeable, as little dots along the length of the bolt. Obviously, this just wouldn’t do. If you have the luxury of rendering the lightning to an offscreen buffer, you can render the bolts using max blending (D3DBLENDOP_MAX) to the offscreen buffer, then just blend that onto the main scene to avoid this problem. If you don’t have this luxury, you can create a vertex strip out of the lightning bolt by creating two vertices for each generated lightning point, and moving each of them along the 2D vertex normals (normals are perpendicular to the average of the directions of the two line segments that meet at the current vertex).

That is, you get something like this:
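The strip construction described above can be sketched roughly as follows. This is an illustration only: `BoltStrip.Build`, the `halfWidth` parameter, and the use of `System.Numerics.Vector2` are assumptions, texture coordinates are omitted, and consecutive points are assumed to be distinct.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class BoltStrip
{
    // Emits two vertices per bolt point, pushed out along the 2D vertex normal.
    // The result is ordered for a triangle strip: left0, right0, left1, right1, ...
    public static Vector2[] Build(IList<Vector2> points, float halfWidth)
    {
        if (points.Count < 2)
            throw new ArgumentException("A strip needs at least two points.");

        var vertices = new Vector2[points.Count * 2];
        for (int i = 0; i < points.Count; i++)
        {
            // Directions of the segments entering and leaving this point (zero at the ends).
            Vector2 dirIn = i > 0 ? Vector2.Normalize(points[i] - points[i - 1]) : Vector2.Zero;
            Vector2 dirOut = i < points.Count - 1 ? Vector2.Normalize(points[i + 1] - points[i]) : Vector2.Zero;

            // Average the two directions; fall back to one of them if they cancel out.
            Vector2 average = dirIn + dirOut;
            if (average.LengthSquared() < 1e-6f)
                average = i > 0 ? dirIn : dirOut;
            average = Vector2.Normalize(average);

            // The vertex normal is perpendicular to the averaged direction.
            Vector2 normal = new Vector2(-average.Y, average.X);
            vertices[2 * i] = points[i] + normal * halfWidth;
            vertices[2 * i + 1] = points[i] - normal * halfWidth;
        }
        return vertices;
    }
}
```

Since neighboring quads share their edge vertices, there is no overlap at the joints, which is what avoids the bright dots from the rounded-beam approach.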

Animation

This is the fun part. How do you animate such a beast?

As with many things in computer graphics, it requires a lot of tweaking. What I found to be useful is as follows:

Each bolt is actually TWO bolts at a time. In this case every 1/3rd of a second, one of the bolts expires, but each bolt’s cycle is 1/6th of a second off. That is, at 60 frames per second:

- Frame 0: Bolt1 generated at full brightness

- Frame 10: Bolt1 is now at half brightness, Bolt2 is generated at full brightness

- Frame 20: A new Bolt1 is generated at full, Bolt2 is now at half brightness

- Frame 30: A new Bolt2 is generated at full, Bolt1 is now at half brightness

- Frame 40: A new Bolt1 is generated at full, Bolt2 is now at half brightness

- Etc…
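The alternating schedule above boils down to a little modular arithmetic. Here is a small sketch of it; the `BoltTiming` class and the linear fade are assumptions (the schedule only pins down full brightness at birth and half brightness 10 frames later), so treat the fade curve as a placeholder to tweak.

```csharp
using System;

public static class BoltTiming
{
    public const int LifetimeFrames = 20; // 1/3 of a second at 60 frames per second
    public const int OffsetFrames = 10;   // the second bolt runs 1/6 of a second behind

    // boltIndex 0 is Bolt1, 1 is Bolt2. Returns the bolt's age in frames,
    // or -1 if that bolt has not been generated yet.
    public static int Age(int frame, int boltIndex)
    {
        int shifted = frame - boltIndex * OffsetFrames;
        if (shifted < 0)
            return -1;
        return shifted % LifetimeFrames;
    }

    // True on the frames where a fresh bolt should be generated.
    public static bool ShouldRegenerate(int frame, int boltIndex)
    {
        return Age(frame, boltIndex) == 0;
    }

    // Assumed linear fade: 1.0 at birth, 0.5 after 10 frames, replaced at 20.
    public static float Brightness(int frame, int boltIndex)
    {
        int age = Age(frame, boltIndex);
        if (age < 0)
            return 0f;
        return 1f - (float)age / LifetimeFrames;
    }
}
```

Each frame, the renderer would regenerate whichever bolt reports `ShouldRegenerate` and draw both bolts scaled by their current `Brightness`.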

Basically, they alternate. Of course, just having static bolts fading out doesn’t work very well, so every frame it can be useful to jitter each point just a tiny bit (it looks fairly cool to jitter the split endpoints even more than that, it makes the whole thing look more dynamic). This gives:

And, of course, you can move the endpoints around…say, if you happen to have your lightning targeting some moving enemies:

So that’s it! Lightning isn’t terribly difficult to render, and it can look super-cool when it’s all complete.

I have been hard at work on my game (in my ridiculously limited spare time) for the last month and a half. One major hurdle that I’ve had to overcome was collision detection code. Specifically, my collision detection performed great on my PC, but when running it on the Xbox 360, everything would slow to a crawl (in certain situations).

The types of collision detection I have to deal with are varied, due to the weird way that I handle certain classes of obstacle (like walls):

- Player bullets vs. Enemy – Player bullets are, for simplicity, treated as spheres, so sphere/mesh testing works here.

- Enemy bullets vs. Player – Same as above.

- Player vs. Wall – Because the game’s playing field is 2D, the walls in-game are treated as 2D polygons, so it boils down to a 2D mesh vs. polygon test.

- Player vs. Enemy – Mesh vs. Mesh here

- Beam vs. Enemy – The player has a bendy beam weapon. I divide the curved beam up into line segments, and do ray/mesh tests.

The worst performance offender was, surprisingly, the sphere vs. mesh test, which will be the subject of this article. Before optimizing, when I’d shoot a ton of bullets in a certain set of circumstances, the framerate would drop well into the single digits, because the bullet vs. mesh (sphere vs. mesh) collision couldn’t keep up. Here are the things that I changed to get this test working much, much faster.

When using Value Types, Consider Passing As References

One thing that was slowing my code down was all of the value type copying that my code was doing. Take the following function:

    public static bool SphereVsSphere(Vector3 centerA, float radiusA, Vector3 centerB, float radiusB)
    {
        float dist = radiusA+radiusB;
        Vector3 diff = centerB-centerA;
        return diff.LengthSquared() < dist*dist;
    }

Simple, yes? This function, however, falls prey to value type copying. You see, “centerA” and “centerB” are both passed in by value, which means that a copy of the data is made on every call. It’s not an issue when done infrequently, but with the number of SphereVsSphere calls that were happening during a given frame, the copies really started to add up.

There’s also a hidden set of copies: the line “Vector3 diff = centerB-centerA” also contains a copy, as it passes centerB and centerA into the Vector3 subtraction operator overload, and they get passed in by value. Also, a new Vector3 gets created inside of the operator then returned, which, I believe, also copies the data into diff.

To eliminate these issues, you should pass all of your non-basic value types (that is, anything that’s not an int, bool, float, anything like that) by reference instead of by value. This eliminates all of the excess copies. It does come at a price, though: in my opinion, it does make the code considerably uglier.

Here’s the updated routine:

    public static bool SphereVsSphere(ref Vector3 centerA, float radiusA, ref Vector3 centerB, float radiusB)
    {
        Vector3 diff;
        Vector3.Subtract(ref centerA, ref centerB, out diff);
        float dist = radiusA+radiusB;
        return diff.LengthSquared() < dist*dist;
    }

Instead of having a nice-looking overloaded subtraction, now there’s a call to Vector3.Subtract. While it’s not so bad in the case of a simple subtraction, when you have a more complicated equation, the calls and temporaries pile up pretty quickly. However, given the speed boost just making this change can give you, it’s totally worth it.
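To give a feel for how the calls pile up, here is a small sketch of a linear interpolation written both ways. `Vec3` is a hypothetical, stripped-down stand-in for XNA's Vector3 so that the example compiles on its own; `LerpByValue` and `LerpByRef` are likewise made-up names, not functions from the game.

```csharp
using System;

// Hypothetical minimal vector type standing in for XNA's Vector3.
public struct Vec3
{
    public float X, Y, Z;

    public Vec3(float x, float y, float z) { X = x; Y = y; Z = z; }

    // Operator versions: operands are passed by value, so each call copies them.
    public static Vec3 operator +(Vec3 a, Vec3 b) { return new Vec3(a.X + b.X, a.Y + b.Y, a.Z + b.Z); }
    public static Vec3 operator -(Vec3 a, Vec3 b) { return new Vec3(a.X - b.X, a.Y - b.Y, a.Z - b.Z); }
    public static Vec3 operator *(Vec3 a, float s) { return new Vec3(a.X * s, a.Y * s, a.Z * s); }

    // ref/out versions: operands are passed by address instead of being copied.
    public static void Add(ref Vec3 a, ref Vec3 b, out Vec3 r) { r = new Vec3(a.X + b.X, a.Y + b.Y, a.Z + b.Z); }
    public static void Subtract(ref Vec3 a, ref Vec3 b, out Vec3 r) { r = new Vec3(a.X - b.X, a.Y - b.Y, a.Z - b.Z); }
    public static void Multiply(ref Vec3 a, float s, out Vec3 r) { r = new Vec3(a.X * s, a.Y * s, a.Z * s); }
}

public static class LerpDemo
{
    // One readable line with operators.
    public static Vec3 LerpByValue(Vec3 a, Vec3 b, float t)
    {
        return a + (b - a) * t;
    }

    // The same math with ref/out calls: three statements and two temporaries.
    public static void LerpByRef(ref Vec3 a, ref Vec3 b, float t, out Vec3 result)
    {
        Vec3 delta, scaled;
        Vec3.Subtract(ref b, ref a, out delta);
        Vec3.Multiply(ref delta, t, out scaled);
        Vec3.Add(ref a, ref scaled, out result);
    }

    public static void Main()
    {
        Vec3 a = new Vec3(0f, 0f, 0f);
        Vec3 b = new Vec3(2f, 4f, 6f);
        Vec3 viaRef;
        LerpByRef(ref a, ref b, 0.5f, out viaRef);
        Vec3 viaValue = LerpByValue(a, b, 0.5f);
        Console.WriteLine("{0} {1} {2}", viaRef.X, viaRef.Y, viaRef.Z);
        Console.WriteLine("{0} {1} {2}", viaValue.X, viaValue.Y, viaValue.Z);
    }
}
```

Both versions compute the same result. Whether the ref form is actually faster depends on the runtime and JIT, so it is worth measuring on the target platform before converting code wholesale.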

Use Hierarchical Collision Detection (But Use a Good Bounding Volume)

Hierarchical collision detection is a good thing.

For those of you that DON’T know, basically instead of testing your collider against every triangle in a mesh, you have a tree in which each node has a bounding volume, and the leaves contain the actual triangles. The idea is that, by doing a much simpler collider vs. bounding volume test, you can eliminate large numbers of triangles before you ever have to test them.

In this case, I was using a sphere tree, where each node in the tree has a bounding sphere, and the leaves of the tree contain actual mesh triangles. I used spheres instead of AABBs (Axis-aligned bounding boxes) because transforming AABBs is expensive (and they become Oriented bounding boxes after the transform). Transforming a sphere is easy, however. None of my object transforms have scale data, so it’s a simple matter of transforming the sphere’s center.

However, the use of bounding spheres has a dark side. Unless every level of your hierarchy is roughly sphere-shaped, a sphere is a terribly inefficient bounding volume. Spheres are usually much larger than the geometry that they contain, so there are more recursions into lower levels of the tree (think of there as being more dead space around the geometry when using spheres than bounding boxes).

By also adding bounding boxes to the data, I could use them where I’m not having to transform them. For instance, because this is sphere vs. mesh, and the entire mesh is rigid, I can take the mesh’s world 4×4 matrix, and transform the sphere by the INVERSE of it. This way, the sphere is in model space, and I can use the bounding volumes without having to do any transformations at lower levels.
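A minimal sketch of that inverse transform is shown here with System.Numerics types standing in for XNA's Matrix and Vector3, so that it runs outside of XNA. The class and method names are assumptions for illustration. It relies on the same condition stated earlier: the world matrix carries no scale, so the radius can be reused unchanged.

```csharp
using System;
using System.Numerics;

public static class ModelSpaceSphere
{
    // Moves a world-space sphere center into the mesh's model space by applying
    // the inverse of the mesh's world matrix. Assumes the world matrix has no
    // scale, so the sphere's radius stays the same.
    public static bool TryToModelSpace(Vector3 worldCenter, Matrix4x4 meshWorld, out Vector3 modelCenter)
    {
        Matrix4x4 inverseWorld;
        if (!Matrix4x4.Invert(meshWorld, out inverseWorld))
        {
            // Singular matrix: there is no inverse to apply.
            modelCenter = worldCenter;
            return false;
        }
        modelCenter = Vector3.Transform(worldCenter, inverseWorld);
        return true;
    }

    public static void Main()
    {
        // A mesh rotated 90 degrees about Y, then moved to (10, 0, 0).
        Matrix4x4 world = Matrix4x4.CreateRotationY((float)(Math.PI / 2))
                        * Matrix4x4.CreateTranslation(10f, 0f, 0f);

        // A sphere sitting exactly on the mesh's world position ends up at
        // (approximately) the model-space origin.
        Vector3 modelCenter;
        TryToModelSpace(new Vector3(10f, 0f, 0f), world, out modelCenter);
        Console.WriteLine(modelCenter.Length() < 1e-4f);
    }
}
```

The inverse only needs to be computed once per mesh per frame, after which every bullet tested against that mesh can reuse it.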

But now I needed a sphere vs. AABB test. However, I didn’t much care if it was exact or not, so instead I used a simple test where I expand the box by the radius of the sphere, then test whether the sphere is inside of the box or not. Near the corners (surely this is where the term “corner case” comes from), this can give false positives, but it will never say the sphere DOESN’T intersect the box when it should say it does. This is an acceptable trade-off.

    public static bool SphereVsAABBApproximate(ref Vector3 sphereCenter, float sphereRadius, ref Vector3 boxCenter, ref Vector3 boxExtent)
    {
        Vector3 relativeSphereCenter;
        Vector3.Subtract(ref sphereCenter, ref boxCenter, out relativeSphereCenter);
        Vector3Helper.Abs(ref relativeSphereCenter);
        return (relativeSphereCenter.X <= boxExtent.X+sphereRadius
             && relativeSphereCenter.Y <= boxExtent.Y+sphereRadius
             && relativeSphereCenter.Z <= boxExtent.Z+sphereRadius);
    }

Simple, but effective. Converting from using a sphere bounding volume to AABBs cut down the number of recursions (and triangle comparisons) being done dramatically, since the AABBs are a much tighter fit to the geometry.
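For comparison, here is a sketch of the approximate test next to an exact closest-point test, to show where the corner false positives come from. It uses System.Numerics types rather than XNA's, and the exact test is a standard technique added for illustration, not something taken from the game's code.

```csharp
using System;
using System.Numerics;

public static class SphereBoxTests
{
    // The approximate test from above, restated with System.Numerics types:
    // grow the box by the sphere's radius and check whether the center is inside.
    public static bool Approximate(Vector3 sphereCenter, float sphereRadius, Vector3 boxCenter, Vector3 boxExtent)
    {
        Vector3 rel = Vector3.Abs(sphereCenter - boxCenter);
        return rel.X <= boxExtent.X + sphereRadius
            && rel.Y <= boxExtent.Y + sphereRadius
            && rel.Z <= boxExtent.Z + sphereRadius;
    }

    // Exact test: compare the squared distance from the sphere's center to the
    // closest point on the box against the squared radius.
    public static bool Exact(Vector3 sphereCenter, float sphereRadius, Vector3 boxCenter, Vector3 boxExtent)
    {
        Vector3 rel = Vector3.Abs(sphereCenter - boxCenter);
        // Per-axis distance outside the box (zero where the center is within the box's span).
        Vector3 outside = Vector3.Max(rel - boxExtent, Vector3.Zero);
        return outside.LengthSquared() <= sphereRadius * sphereRadius;
    }

    public static void Main()
    {
        Vector3 boxCenter = Vector3.Zero;
        Vector3 boxExtent = new Vector3(1f, 1f, 1f);

        // A unit sphere sitting diagonally off a corner. It is 0.9 outside the
        // box on every axis, so its distance to the corner is about 1.56.
        Vector3 nearCorner = new Vector3(1.9f, 1.9f, 1.9f);

        Console.WriteLine(Approximate(nearCorner, 1f, boxCenter, boxExtent)); // True (false positive)
        Console.WriteLine(Exact(nearCorner, 1f, boxCenter, boxExtent));       // False
    }
}
```

The approximate version treats the expanded box as having square corners, while the true region of intersection has rounded ones. Anything the exact test accepts is also accepted by the approximate one, so when the approximate test is used for culling, no real collision is ever skipped.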

Recursion Is Weird

One suggestion I got, also, was to eliminate recursion. The hierarchical nature of the algorithm meant that my test was recursive. Here was the test as originally written:

    public static bool SphereVsAABBTree(ref Vector3 sphereCenter, float sphereRadius, CollisionTreeNode node)
    {
        // If the sphere misses this node's box, it misses everything beneath it.
        if (!SphereVsAABBApproximate(ref sphereCenter, sphereRadius, ref node.BoxCenter, ref node.BoxExtent))
            return false;

        // Interior node: recurse into both children.
        if (node.Left != null)
        {
            if(SphereVsAABBTree(ref sphereCenter, sphereRadius, node.Left))
                return true;
            return SphereVsAABBTree(ref sphereCenter, sphereRadius, node.Right);
        }

        // Leaf node: test the actual triangles.
        for(int i = 0; i < node.Indices.Length; i+=3)
        {
            if(SphereVsTriangle(ref sphereCenter, sphereRadius, ref node.Vertices[node.Indices[i+0]], ref node.Vertices[node.Indices[i+1]], ref node.Vertices[node.Indices[i+2]]))
                return true;
        }
        return false;
    }

As you can see, it recurses into child nodes until it either gets a false test out of both of them, or it reaches triangles. But how do you eliminate recursion in a tree such as this? More specifically, how do you do it while using a constant (non-node-count dependent) amount of memory?

The trick is as follows: Assuming your nodes contain Parent pointers in addition to Left and Right pointers (where the Parent of the trunk of the tree is null), you can do it with no issue. You track the node that you’re currently visiting (“cur”), and the node that you previously visited (“prev”, initialized to null). When you reach a node, test as follows:

- If you came to it via its parent (that is, prev == cur.Parent), you’ve never visited it before.

    - At this point, you should do your collision tests. It’s a newly-visited node.

    - prev = cur

    - cur = cur.Left (this is basically “recursing” into the Left node)

- If you arrived from its Left child, you visited it before and recursed into its left side.

    - Since this node has already been visited, do not do the collision tests. They’ve already been shown to be successful.

    - prev = cur

    - cur = cur.Right (since we recursed into Left last time we were here, recurse into Right this time)

- If you arrived via its Right child, you’ve visited both of its children, so you are done with this node.

    - Again, this node has already been visited, so do not run the collision tests.

    - prev = cur

    - cur = cur.Parent (we’re done with this node, so go back to its parent)

- When doing the collision tests, if a Sphere vs. AABB bounding volume test ever fails, we don’t have to “recurse”, so go back to the node’s parent.

    - prev = cur

    - cur = cur.Parent

- Finally, if you do a Sphere vs. Triangle collision test and it succeeds, we can immediately return, as we have a guaranteed collision, and no more triangles or nodes need to be tested.

Doing all of this makes the routine bigger, but no recursion is necessary, so there’s no additional stack space generated per node visited (and no function call overhead, either). The finished code is as follows (note that I also added a quick sphere vs. sphere test right at the outset, because it’s a very quick early out if the sphere is nowhere near the mesh):

    public static bool SphereVsAABBTree(ref Vector3 sphereCenter, float sphereRadius, CollisionTreeNode node)
    {
        CollisionTreeNode prev = null, cur = node;

        // Quick early out: is the sphere anywhere near the mesh at all?
        if (!SphereVsSphere(ref sphereCenter, sphereRadius, ref node.Sphere.Center, node.Sphere.Radius))
            return false;

        while(cur != null)
        {
            // Arrived from the parent, so this node is being visited for the first time.
            if(prev == cur.Parent)
            {
                if (!SphereVsAABBApproximate(ref sphereCenter, sphereRadius, ref cur.BoxCenter, ref cur.BoxExtent))
                {
                    // Bounding volume test failed: skip this subtree and go back up.
                    prev = cur;
                    cur = cur.Parent;
                    continue;
                }

                for(int i = 0; i < cur.Indices.Length; i+=3)
                {
                    if(SphereVsTriangle(ref sphereCenter, sphereRadius, ref cur.Vertices[cur.Indices[i+0]], ref cur.Vertices[cur.Indices[i+1]], ref cur.Vertices[cur.Indices[i+2]]))
                        return true;
                }
            }

            if (cur.Left != null)
            {
                if (prev == cur.Parent)
                {
                    // First visit: "recurse" into the left child.
                    prev = cur;
                    cur = cur.Left;
                    continue;
                }
                if (prev == cur.Left)
                {
                    // Back from the left child: "recurse" into the right child.
                    prev = cur;
                    cur = cur.Right;
                    continue;
                }
            }

            // Leaf node, or back from the right child: return to the parent.
            prev = cur;
            cur = cur.Parent;
        }
        return false;
    }
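To check the visiting order without any collision math, here is a small sketch that runs the same prev/cur bookkeeping over a toy tree and records the nodes it reaches. `DemoNode` is a hypothetical stand-in for CollisionTreeNode that keeps only the Parent, Left, and Right links plus a label, and the `reject` callback plays the role of a failed bounding volume test. Like the routine above, it assumes the traversal starts at the root (Parent is null) and that every interior node has both children.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for CollisionTreeNode: just the links plus a label.
public class DemoNode
{
    public string Name;
    public DemoNode Parent, Left, Right;

    public DemoNode(string name, DemoNode left = null, DemoNode right = null)
    {
        Name = name;
        Left = left;
        Right = right;
        if (left != null) left.Parent = this;
        if (right != null) right.Parent = this;
    }
}

public static class TraversalDemo
{
    // Walks the tree using only prev/cur, recording each node on first arrival.
    public static List<string> Visit(DemoNode root, Predicate<DemoNode> reject)
    {
        var order = new List<string>();
        DemoNode prev = null, cur = root;
        while (cur != null)
        {
            DemoNode next = cur.Parent; // default: done with this node, go back up
            if (prev == cur.Parent)
            {
                // Arrived from the parent: first visit.
                order.Add(cur.Name);
                if (!reject(cur) && cur.Left != null)
                    next = cur.Left;
            }
            else if (prev == cur.Left)
            {
                // Back from the left child: go right.
                next = cur.Right;
            }
            prev = cur;
            cur = next;
        }
        return order;
    }

    public static void Main()
    {
        var tree = new DemoNode("A",
            new DemoNode("B", new DemoNode("D"), new DemoNode("E")),
            new DemoNode("C"));

        Console.WriteLine(string.Join(" ", Visit(tree, n => false)));         // A B D E C
        Console.WriteLine(string.Join(" ", Visit(tree, n => n.Name == "B"))); // A B C
    }
}
```

The second call shows the pruning: rejecting B means D and E are never reached, and the only state carried between iterations is the two node references.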

Mission Complete

After making that set of changes, the sphere vs. mesh tests no longer bog down on the Xbox, even in a degenerate case such as when there are tens or hundreds of bullets well inside of the mesh’s area.

Getting the sphere vs. mesh test working was a great accomplishment, but as much as I thought it was already working well, it turns out that mesh vs. mesh testing was a much bigger problem. However, that’s another story for another day.

Okay, I’m back! Sorry for the delay, my job got super-crazy there for a month or so. It hasn’t really let up too much, but it’s enough that I was able to get a little bit done. However, nothing really to show for it, I’m afraid.

But, I do have SOMETHING interesting: a look into the HUD design process. This work was done almost a month ago, but I haven’t had time to even sit down and write this entry until now.

Necessary elements

There are a few elements that are necessary on the in-game HUD:

- Player name – Especially important in two-player mode, having both players’ names on-screen will help to differentiate which statistics belong to which player

- Lives – Also very important is the number of lives that a player has.

- Score – Points. Very important.

- Weapon Charge – You’ll acquire weapon charge throughout the course of the game, which you’ll be able to spend to temporarily upgrade your weapons. This meter will show you how much charge you have. I chose to represent this with a blue bar.

- Secret Charge – I’m not quite ready to divulge this little gem, but this meter only fills up when the blue (weapon charge) meter is completely full. I chose yellow for this one.

(Mockups of the design process below the fold)

Mockups!

I quickly made a mockup of my initial idea for the HUD.

The first suggested modification was to swap the two meters vertically. Because the yellow bar only fills up when the blue meter is full, it would be analogous to pouring in water (filling from the bottom up). I liked this concept, so I swapped the bars (I wanted to get just one readout set up, so I took out Player 2 for the next while):

At that point, I didn’t really feel that the look was consistent. The text didn’t match the bars, and I didn’t like the look of the gradient on the text. So I reworked both so that they had bright centers fading to darker colors at the top and bottom extremities, which really unified the look of the various elements.

At this point, it was noted that the bar looked kind of stupid relative to the text, since it’s so much larger. So the scale of the bars was modified to match the height of the text. This also allowed a little bit of the height to be taken out of the HUD.

At this point, I was happy with the layout, so I set out to figure out how to put the second player in. Initially I had two options:

The first one is sort of the “classic” two player layout. The second was optimized so that there would be a minimum of data sitting over where the enemies are coming from (potentially obscuring relevant enemy activity). However, everyone that I talked to (including myself, though I promise that dialogue was mostly internal) thought that option B was a pretty terrible-looking layout, so I scrapped it entirely…but I wasn’t entirely happy with the first option, either.

So I started to play around some more.