(originally posted over at Cohost)

I was writing about how to parse C++17-style hex floating point literals, and in doing so I ended up writing a bunch about how floats work in general (and specifically how floating point rounding happens), so I opted to split it off from that post into its own, since it’s already probably way too many words as it is ?

Here we go!

How Do Floats Work

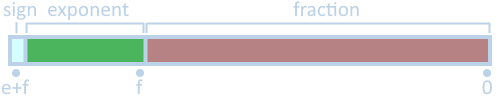

If you don’t know, a floating-point number (At least, an IEEE 754 float, which effectively all modern hardware supports), consists of three parts:

- Sign bit – the upper bit of the float is the sign bit: 1 if the float is negative, 0 if it’s positive.

- Exponent – the next few bits (8 bits for a 32-bit float, 11 bits for a 64-bit float) contain the exponent data, which is the power of two to multiply the given hex value with. (Note that the exponent is stored in a biased way – more on that in a moment)

- Mantissa – the remaining bits (23 for 32-bit float, 52 for a 64-bit float) represent the fractional part of the float’s value.

In general (with the exception of subnormal floats and 0.0, explained in a bit) there is an implied 1 in the float: that is, if the mantissa has a value of “FC3CA0000” the actual float is 1.FC3CA0000 (the mantissa bits are all to the right of the decimal point) before the exponent is applied. Having this implied 1 gives an extra bit of precision to the value since you don’t even have to store that extra 1 bit anywhere – it’s implied! Clever.

The exponent represents the power of two involved (Pow2(exponent)), which has the nice property that multiplies or divides of a float by powers of two do not (usually, except at extremes) affect the precision of the number, dividing by 2 simply decrements the exponent by 1, and multiplying by 2 increments the exponent by 1.

For a double-precision (64-bit) float, the maximum representable exponent is 1023 and the minimum is -1022. These are stored in 11 bits, and they’re biased (which is to say that the stored 11 bits is actualExponent + bias where the bias is 1023. That means that this range of [-1022, 1023] is actually stored as [1, 2046] (00000000001 and 11111111110 in binary). This range uses all but two of the possible 11-bit values, which are used to represent two sets of special cases:

- Exponent value

00000000000brepresents a subnormal float – that is, it still has the effective exponent of -1022 (the minimum representable exponent) but it does NOT have the implied 1 – values smaller than this start to lose bits of precision for every division by 2 as it can’t decrement the exponent any farther and so ends up sliding the mantissa to the right instead.- For this

00000000000bexponent, if the mantissa is 0, then you have a value of 0.0 (or, in a quirk of floating point math, -0.0 if the sign bit is set).

- For this

- Exponent value

11111111111brepresents one of two things:- If the mantissa is zero, this is infinity (either positive or negative infinity depending on the sign bit).

- If the mantissa is non-zero, it’s NaN (not a number).

- (There are two types of NaN, quiet and signaling. Those are a subject for another time, but the difference bit-wise is whether the upper bit of the mantissa is set: if 1 it’s quiet, if 0 then it’s signalling).

If you wanted to write a bit of math to calculate the value of a 64-bit float (ignoring the two special exponent cases) it would look something like this (where bias in this case is 1023):

(signBit ? -1 : 1)

* (1 + (mantissaBits / Pow2(52 + 1))

* Pow2(exponentBits - bias)Standard Float Rounding

Okay, knowing how floats are stored, clearly math in the computer isn’t done with infinite precision, so when you do an operation that drops some precision, how does the result get rounded?

When operations are done with values with mismatched exponents, the value with the lowest exponent is effectively shifted to the right by the difference to match the exponents.

For example, here’s the subtraction of two four-bit-significand (3 bits of mantissa plus the implied 1) floats:

1.000 * 2^5

- 1.001 * 2^1The number being subtracted has the smaller exponent, so we end up shifting it to the right to compensate (for now, doing it as if we had no limit on extra digits):

1.000 0000 * 2^5

- 0.000 1001 * 2^5 // Shifted right to match exponents

------------------

0.111 0111 * 2^5

1.110 111 * 2^4 // shifted left to normalize (fix the implied 1)

1.111 * 2^4 // round up since we had more than half off the edgeNote that in this example, the value being subtracted shifted completely off the side of the internal mantissa bit count. Since we can’t store infinite off-the-end digits, what do we do?

Float math uses three extra bits (to the “right” of the mantissa), called the guard bit, the round bit, and the sticky bit.

As the mantissa shifts off the end, it shifts into these bits. This works basically like a normal shift right, with the exception that the moment that ANY 1 bit get shifted into the sticky bit, it stays 1 from that point on (that’s what makes it sticky).

For instance:

G R S

1.001 0 0 0 * 2^1

0.100 1 0 0 * 2^2 // 1 shifts into the guard bit

0.010 0 1 0 * 2^3 // now into the round bit

0.001 0 0 1 * 2^4 // now into the sticky bit

0.000 1 0 1 * 2^5 // sticky bit stays 1 nowNote that the sticky bit stayed 1 on that last shift, even though in a standard right shift it would have gone off the end. Basically if you take the mantissa plus the 3 GRS bits (not to be confused with certain cough other meanings of GRS) and shift it to the right, the operation is the equivalent of:

mantissaAndGRS = (mantissaAndGRS >> 1) | (mantissaAndGRS & 1)Now when determining whether to round, you can take the 3 GRS bits and treat them as GRS/8 (i.e. GRS bits of 100b are the equivalent of 0.5 (4/8), and 101b is 0.625 (5/8)), and use that as the fraction that determines whether/how you round.

The standard float rounding mode is round-to-nearest, even-on-ties (that is, if it could round either way (think 1.5, which is equally close to either 1.0 or 2.0), you round to whichever of the neighboring values is even (so 1.5 and 2.5 would both round to 2).

Using our bits, the logic, then, is this:

- If the guard bit is not set, then it rounds down (fraction is < 0.5), mantissa doesn’t change.

- If the guard bit IS set:

- If round bit or sticky bit is set, always round up (fraction is > 0.5), mantissa increases by 1.

- Otherwise, it’s a tie (exactly 0.5, could round either way), so round such that the mantissa is even (the lower bit of the mantissa is 0), mantissa increments if the lower bit was 1 (to make it even).

Okay, so if all we care about is guard bit and then <round bit OR sticky bit>, why even have three bits? Isn’t two bits enough?

Nope! Well, sometimes, but not always. Turns out, some operations (like subtraction) can require a left shift by one to normalize the result (like in the above subtraction example), which means if you only had two bits of extra-mantissa information (just, say, a round and sticky bit) you’d be left with one bit of information after the left shift and have no idea if there’s a rounding tiebreaker.

For instance, here’s an operation with the proper full guard, round, and sticky bits:

1.000 * 2^5

- 1.101 * 2^1

// Shift into the GRS bits:

1.000 000 * 2^5

- 0.000 111 * 2^5 // sticky kept that lowest 1

------------------

0.111 001 * 2^5

1.110 01 * 2^4 // shift left 1, still 2 digits left

1.110 * 2^5 // Round down properlyIf this were done with only two bits (round and sticky) we would end up with the following:

1.000 * 2^5

- 1.101 * 2^1

// Shift into just RS bits:

1.000 00 * 2^5

- 0.000 11 * 2^5

------------------

0.111 01 * 2^5

1.110 1 * 2^4 // shift left 1, still 2 digits leftOnce we shift left there, we only have one bit of data, and it’s set. We don’t know whether or not we had a fraction > 0.5 (meaning we have to round up) or < 0.5 (meaning we round to even, which is down in this case).

So the short answer is: three bits because sometimes we have to shift left and lose one bit of the information, and we need at least a “half bit” and a “tiebreaker bit” at all times. Given all the float operations, 3 suffices for this, always.