For those of you not using Direct3D 9 or XNA, you can safely ignore this post (OpenGL and Direct3D 10 are immune to this particular oddity). However, if you are, it’s likely that you’ve had to deal with the dreaded half-texel offset. Today, after I don’t know how many years of using Direct3D, I came to realize that I really didn’t understand what the source of the issue was. Now that I’ve sort of gotten a handle on it, I figured I’d post it to my super new journal. Consider it a test run.

Coordinate Spaces

The first thing to note is the basic coordinate space. I’m going to be referring to texture space and clip space a lot, so I thought I’d just do a quick refresher here on what I mean (mostly to make sure you’re thinking with the same terminology that I’m using).

- Clip space – the post-projection half-cube of space where X,Y in [-1..1] and Z in [0..1]

  - X = -1 is the far left edge of the screen

  - X = 1 is the far right edge

  - Y = -1 is the bottom edge

  - Y = 1 is the top

  - Z = 0 is near (the near plane)

  - Z = 1 is far (the far plane)

- Texture space – the area where u,v in [0..1] on a texture map making up a single end-to-end repeat of the texture.

  - 0,0 represents the upper-left coordinate on the texture

  - 1,1 is the lower-right.

For simplification, we can ignore the Z direction in clip space, since this article is really about the screen-space aspects of it.
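To make the two spaces concrete, here is a minimal sketch of the straight mapping between them, using only the conventions listed above. The function name `clip_to_uv` is made up for illustration, and this is the naive mapping with no offset correction of any kind.

```python
def clip_to_uv(x, y):
    """Map a clip-space (x, y) in [-1..1] to texture-space (u, v) in [0..1].

    Clip space has +y pointing up, texture space has +v pointing down,
    so the y axis is flipped along the way.
    """
    u = (x + 1.0) * 0.5
    v = (1.0 - y) * 0.5
    return (u, v)


# Upper-left of the screen lands on the upper-left of the texture.
print(clip_to_uv(-1.0, 1.0))   # (0.0, 0.0)
# Lower-right of the screen lands on the lower-right of the texture.
print(clip_to_uv(1.0, -1.0))   # (1.0, 1.0)
```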

Texture Sampling

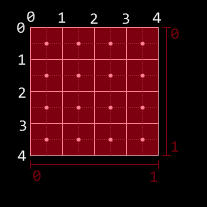

Texture sampling is pretty straightforward.

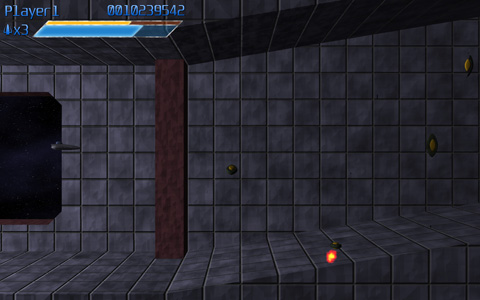

In this diagram (and future diagrams), I’m using a 4×4 texture. The [0..1] range in both X and Y maps exactly to the range of the texture. Note, here, that the center of the upper-left texel does not lie at 0,0; instead, 0,0 refers to the upper-left corner of that texel. This is an important thing: if you are using bilinear filtering and you want to sample color data from a single texel, you need to sample from the center of the texel instead of the corner. To do that, you’d sample from a half-texel in. For this 4×4 texture, you’d want to sample from (0.125, 0.125), because a texel’s width in texture space is 0.25 (each texel is 1/4 of texture space), and half of that is 0.125.
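As a quick sketch of that arithmetic (the helper name `texel_center` is hypothetical, not from any API), the center of texel (ix, iy) sits half a texel in from its upper-left corner:

```python
def texel_center(ix, iy, width, height):
    """Return the (u, v) of the center of texel (ix, iy) in a width x height texture."""
    return ((ix + 0.5) / width, (iy + 0.5) / height)


# Upper-left texel of the 4x4 example texture.
print(texel_center(0, 0, 4, 4))  # (0.125, 0.125)
# Lower-right texel of the same texture.
print(texel_center(3, 3, 4, 4))  # (0.875, 0.875)
```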

A side effect of this is that, if you have bilinear sampling and wrapping on, sampling along the left edge gives you exactly the same data as sampling along the right edge at the same y coordinate. That is, in both cases, you get an even blend of the texels on either end of the texture. This is true for the borders between texels in general: sampling where the “dot” is on the image above is the only way to get a pure sample of a texel with bilinear filtering. Anywhere else will be a blend of two or more texels (up to four). Edges (the lines) are where the two texels neighboring the edge are weighted evenly.
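Here is a small one-dimensional sketch of that behavior. It is a toy software model of linear filtering with wrap addressing, not how any particular GPU implements it, and the function name and texel values are made up for illustration.

```python
import math


def sample_linear_wrap(texels, u):
    """Linearly filter a 1D row of texels at coordinate u, with wrap addressing."""
    n = len(texels)
    # Shift so that texel centers sit on integer positions.
    x = u * n - 0.5
    i0 = math.floor(x)
    t = x - i0                      # blend weight toward the next texel
    a = texels[i0 % n]              # wrap: index -1 becomes the last texel
    b = texels[(i0 + 1) % n]        # wrap: index n becomes the first texel
    return a * (1.0 - t) + b * t


row = [0.0, 1.0, 2.0, 8.0]          # one row of a 4-texel-wide texture

print(sample_linear_wrap(row, 0.125))  # texel center: pure sample, 0.0
print(sample_linear_wrap(row, 0.25))   # border of texels 0 and 1: 0.5
print(sample_linear_wrap(row, 0.0))    # left edge: blend of last and first, 4.0
print(sample_linear_wrap(row, 1.0))    # right edge: the same blend, 4.0
```

The two edge samples match because, with wrapping, u = 0 and u = 1 both sit on the border between the last texel and the first.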

The upshot of this is that the official center of a texel is located where the center SHOULD be: half of a texel from the edge. However, as we’ll see in a moment, the rule for sampling texels does not apply to pixels.

Pixel Coordinates Are Weird And Unintuitive

When referring to “pixel coordinates” vs. “texel coordinates,” pixel coordinates are, for lack of a better way to explain it, the coordinates used when rendering to the screen (or a render target). The pixels are the destination coordinates.

This is where clip space comes in: When you render your geometry, it goes through the gauntlet of matrices, ending with the projection matrices, and ends up in clip space (with x and y both being in [-1..1] for the visible area of the screen).

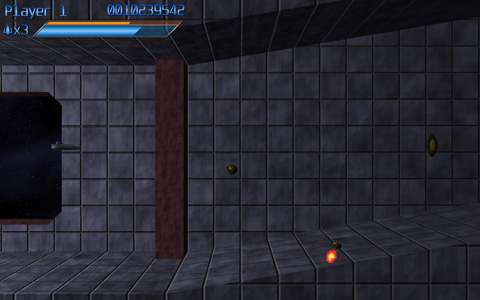

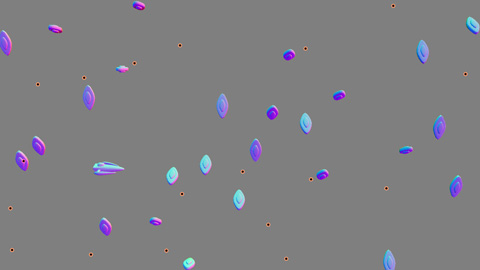

This diagram shows how clip space maps to pixels. Note that the upper-left clip space coordinate (-1, +1) is actually RIGHT ON the pixel center. This is the root cause of the whole offset problem.

Basically, say you have a screen-sized texture. If you draw it to the screen using a full-screen quad (clip space from upper-left (-1, 1) to lower-right (1, -1)), the upper-left pixel would sample the very upper-left corner of your quad, which would give you texture coordinates of (0,0). But remember: that’s not the center of the texel! It’s actually the upper-left corner of the texel. With bilinear filtering, instead of getting a pure sample of your texture, you get an even blend of all 4 surrounding texels.

In simpler terms: the half-pixel/half-texel offset exists because there’s a discrepancy between how the centers of pixels and the centers of texels are computed.

To fix this, you could simply offset your texture coordinates by a half-texel before sampling. That is, when your shader gets its uv coordinate, you’d add a half-texel ((0.125, 0.125) for our 4×4 example) before sampling, which would then give you a perfectly lined-up texture sample, and you’d get a great 1:1 mapping of pixels to texels.
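The mismatch and the fix can be sketched in one dimension. This is a toy model that assumes the Direct3D 9 convention described above (pixel centers sit on integer screen coordinates, so clip x = -1 lands on the center of pixel 0) and a render target the same width as the texture. The function names are hypothetical.

```python
def quad_u_at_pixel(px, width):
    """U interpolated at the center of pixel px for a quad spanning
    clip x in [-1, 1] with u in [0, 1], under the D3D9 pixel-center rule."""
    # Pixel center px sits at screen x = px, which maps back to this clip x.
    clip_x = px * 2.0 / width - 1.0
    return (clip_x + 1.0) * 0.5


def corrected_u(px, width):
    """Same as above, with the half-texel added before sampling."""
    return quad_u_at_pixel(px, width) + 0.5 / width


# 4-pixel-wide target, 4-texel-wide texture.
print([quad_u_at_pixel(px, 4) for px in range(4)])  # [0.0, 0.25, 0.5, 0.75]
print([corrected_u(px, 4) for px in range(4)])      # [0.125, 0.375, 0.625, 0.875]
```

The uncorrected values all land on texel borders; the corrected ones land on texel centers.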

However, there’s another problem. What happens when you’re using multisample anti-aliasing (MSAA)?

Weird Pixel Centers and MSAA

So, we now know that the center of the origin pixel is actually the upper-left coordinate of clip space. This causes problems when MSAA is turned on. With MSAA, the geometry is sampled multiple times per pixel, using a set of points that exist within the pixel’s square (while a pixel is not really a square, but a point sample within a square, MSAA effectively treats a pixel as multiple samples in a square).

As an (important) aside: with MSAA on, textures are still only sampled once, from the pixel center. MSAA does not affect the UV coordinates you get for a given pixel, it merely affects geometry coverage.

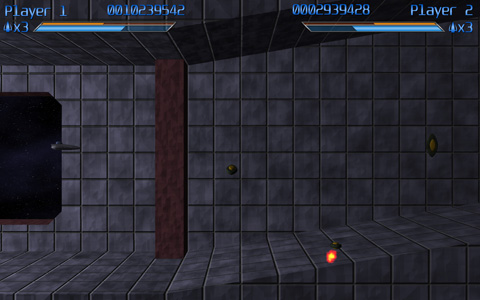

Continuing with our example, consider a hypothetical, simple MSAA strategy wherein a pixel is sampled four times (4x MSAA) in an aligned grid.

This diagram illustrates the issue with the standard [-1..1] full screen quad, D3D’s choice of pixel center, and MSAA. Even with a full-clip-space (and, thus, traditionally full-screen) quad, along the top and left edges, some samples no longer touch the quad, and thus the edges are not entirely filled in (a pure white quad drawn to a render target that had been cleared to black would have grey edges along the top and left).

You’ve likely seen this in a ton of games: when you turn on full-screen anti-aliasing, there’s a weird line down the side of the screen during fadeouts and the like. This is the pixel offset problem rearing its head.

How do you fix this? Turns out, it’s simple. When drawing a full-screen quad, instead of adding a half-texel to the uv coordinates, you should instead SUBTRACT a half-pixel from the position (not the uv coordinates). This shifts the quad so that it lies perfectly within the grid in the diagram, so that every MSAA sample hits the geometry. As an added bonus, it means that the UV samples that you get will already be in the texel centers; no more adding a half-texel required!
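To see both the problem and the fix in numbers, here is a rough sketch of the hypothetical aligned-grid 4x MSAA pattern from earlier. It is a toy model with assumptions of my own: pixel centers on integer screen coordinates (the D3D9 rule), samples at plus or minus a quarter pixel from the center, and a simplified inclusive-left/top coverage test. Real hardware sample patterns differ.

```python
def coverage(px, py, width, height, shift=0.0):
    """Fraction of the 4 MSAA samples of pixel (px, py) covered by a
    full-screen quad, optionally shifted up-left by `shift` pixels."""
    # Quad extents in screen space; the unshifted quad spans [0, width) x [0, height).
    x0, y0 = 0.0 - shift, 0.0 - shift
    x1, y1 = width - shift, height - shift
    hits = 0
    for oy in (-0.25, 0.25):
        for ox in (-0.25, 0.25):
            sx, sy = px + ox, py + oy   # sample position in screen space
            if x0 <= sx < x1 and y0 <= sy < y1:
                hits += 1
    return hits / 4.0


# Unshifted quad on a 4x4 target: corner and edge pixels are only partly covered.
print(coverage(0, 0, 4, 4))             # 0.25 (upper-left corner pixel)
print(coverage(1, 0, 4, 4))             # 0.5  (top edge pixel)
print(coverage(2, 2, 4, 4))             # 1.0  (interior pixel)

# Quad shifted half a pixel toward the upper-left: every sample is covered.
print(coverage(0, 0, 4, 4, shift=0.5))  # 1.0
print(coverage(3, 3, 4, 4, shift=0.5))  # 1.0
```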

Note that when I say “subtract a half-pixel from position,” what I mean is “move the position of the quad one half-pixel towards the upper-left.” To do this, you actually add (-1/width, +1/height). The reason for the signs (-, +) is that you’re moving left and up. In post-projection space, -x is left, and +y is up. The reason that it’s 1/width instead of 0.5/width is that, in clip space, the coordinates range from -1 to 1 (width 2), and not from 0 to 1 (width 1) like they do in textures, so you need to double the movement to account for that.
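A minimal sketch of that offset applied to the four corners of a full-screen quad follows. The helper names and the (x, y, u, v) vertex layout are assumptions for illustration, and it assumes the positions have w = 1, as they typically do for a full-screen quad; with any other w the offset would need to be scaled by w.

```python
def half_pixel_clip_offset(width, height):
    """Half a pixel toward the upper-left, expressed in clip-space units.

    Clip space spans 2 units across the target, so half a pixel is
    0.5 * (2 / width) = 1 / width. Left is -x, up is +y.
    """
    return (-1.0 / width, 1.0 / height)


def shifted_fullscreen_quad(width, height):
    """Return (x, y, u, v) corners of a full-screen quad with the offset applied."""
    dx, dy = half_pixel_clip_offset(width, height)
    corners = [
        (-1.0,  1.0, 0.0, 0.0),  # upper-left
        ( 1.0,  1.0, 1.0, 0.0),  # upper-right
        (-1.0, -1.0, 0.0, 1.0),  # lower-left
        ( 1.0, -1.0, 1.0, 1.0),  # lower-right
    ]
    # Only the position moves; the uv coordinates are left untouched.
    return [(x + dx, y + dy, u, v) for (x, y, u, v) in corners]


print(half_pixel_clip_offset(4, 4))         # (-0.25, 0.25)
print(shifted_fullscreen_quad(4, 4)[0])     # (-1.25, 1.25, 0.0, 0.0)
```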

Of course, if you’re drawing world geometry and using its screen coordinates to index into a texture map (like, for instance, if you’re using light pre-pass rendering), you’ll still want to add the half-texel instead. In fact, here is a great reference on how to handle this case.

Take Us Home, Article

The half-texel/half-pixel offset is a bizarre feature in Direct3D 9 (and, by extension, XNA). In order to properly handle it when using full-screen-sized textures:

- When rendering a full-screen (or otherwise screen-aligned) quad, subtract a half-pixel’s size from the output vertex position in your vertex shader. This will ensure both that the texel centers line up with the pixel centers (for proper texture sampling) AND that the quad will play nice along the left and top edges of the screen with MSAA.

- When rendering normal geometry, the geometry is already in the proper place (i.e. it already plays fine with MSAA). Consequently, you should add a half-texel to the uv coordinates for your full-screen sample. This will allow you to sample from the texel centers as desired.

While this article refers mostly to full-screen effects, this information is generally more useful when downsampling textures, as you need to know where your sample points on the source texture will actually hit when rendering (and you’ll want bilinear filtering for, for instance, a 4-tap 4×4 average downsample).
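As one possible reading of that 4-tap 4×4 average, here is a sketch of where the taps could go. The assumption (mine, not spelled out above) is that each destination pixel covers a 4×4 block of source texels and that each bilinear tap is placed on the corner shared by a 2×2 group of texels, so each tap returns the average of its four texels. The function name is hypothetical.

```python
def downsample_taps(dx, dy, src_width, src_height):
    """Return four (u, v) tap positions in the source texture for destination
    pixel (dx, dy) of a 4x downsample.

    Each tap sits on the shared corner of a 2x2 texel group, so with bilinear
    filtering it averages those four texels; four taps cover the 4x4 block.
    """
    taps = []
    for oy in (1, 3):          # corners between texel rows 0-1 and 2-3 of the block
        for ox in (1, 3):      # corners between texel columns 0-1 and 2-3
            u = (4 * dx + ox) / src_width
            v = (4 * dy + oy) / src_height
            taps.append((u, v))
    return taps


# First destination pixel of an 8x8 source downsampled to 2x2.
print(downsample_taps(0, 0, 8, 8))
# [(0.125, 0.125), (0.375, 0.125), (0.125, 0.375), (0.375, 0.375)]
```

Averaging the four tap results would then give the mean of all sixteen source texels, provided the uv coordinates really do land on those corners, which is exactly what the offset handling above is about.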

Hopefully, if you were as confused by the half-texel offset as I was, this helps clear things up.

Additional references from MSDN: