In my previous two posts, I started looking into what it would take to code the networking for my game, and came up with a first draft, before realizing that floating-point discrepancies between systems totally threw my lockstepping idea for a loop.

Lockstepping With Collisions

In order to solve the issue with different systems having different floating-point calculation results, I decided to somewhat revamp the network design, and really leverage the fact that I don’t care so much about cheating – you could never get away with a networking scheme like this in a competitive game.

- First, the timing of the scrolling, player bullet fire rates, enemy fire rates, etc. were all modified to be integer-based instead of float-based. There are no discrepancies in the way that integer calculations happen from machine to machine, so the timings of things like enemy spawning, level scrolling, etc. are now all perfectly in sync, frame by frame.

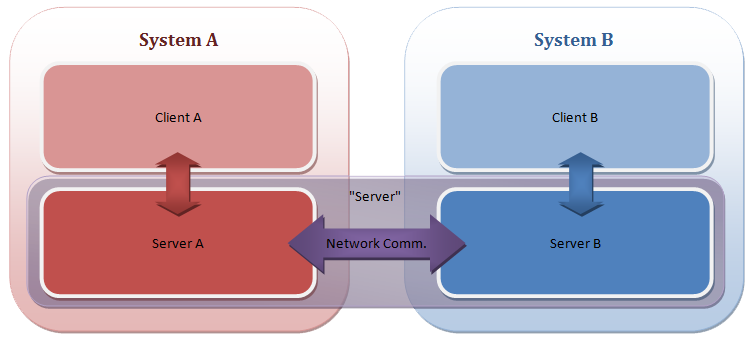

- Next, when the client detects a collision between entities, it sends a message to the server (which, you may recall, is running on the same system as the client – each machine gets one) notifying it of a collision. These messages are also synced across the network.

- Thus, whenever an enemy dies on any one client, it dies on both servers on the same tick (that is, the “first” client relative to the game clock to detect a collision determines when the collision took place). This means that there are no longer any timing differences between deaths on each server (and if a collision happens to be missed by one client, but hits on the other, it will eventually reach the other client).

- Because players were already sending “I died!” flags as part of their network packets, these were already always perfectly in sync, so no change was needed there.
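To make the integer-timing idea concrete, here's a minimal sketch in Python (not the game's actual XNA code). The constants and enemy names are made up for illustration; the only point is that every timing decision uses whole-number tick counts, so two machines running it can't drift apart.

```python
# Hypothetical values; the real game's numbers are not given in this post.
FIRE_INTERVAL_TICKS = 6        # player fires once every 6 simulation ticks
SCROLL_PIXELS_PER_TICK = 2     # level scrolls a whole number of pixels per tick
SPAWN_TICKS = {120: "grunt", 300: "bomber"}  # tick -> enemy type to spawn


def simulate(total_ticks):
    """Advance the level using only integer arithmetic."""
    scroll = 0
    events = []
    for tick in range(total_ticks):
        scroll += SCROLL_PIXELS_PER_TICK
        if tick % FIRE_INTERVAL_TICKS == 0:
            events.append((tick, "fire"))
        if tick in SPAWN_TICKS:
            events.append((tick, "spawn " + SPAWN_TICKS[tick]))
    return scroll, events
```

Because nothing here depends on elapsed wall-clock time or float accumulation, calling `simulate` with the same tick count gives the same scroll position and event list everywhere.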

As an added bonus, since all collision detections are now handled by the client (and communicated to the server), the server never has to do any collision detection calculations on its own, which eases up on the CPU load somewhat (previously, the client and server were both doing collision calculations). All the server has to do now is apply collisions reported by either the local client or the remote client.
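A rough sketch of what "apply collisions reported by either client" could look like for a single tick is below. It's written in Python for brevity and simplifies a collision down to "this enemy was hit and dies", which is an assumption on my part; `apply_tick` and its parameters are hypothetical names.

```python
def apply_tick(alive, local_hits, remote_hits):
    """Apply every collision reported for one tick by either client.

    alive       -- set of enemy ids still alive on this server (modified in place)
    local_hits  -- enemy ids the local client reported as hit on this tick
    remote_hits -- enemy ids the remote client reported as hit on this tick
    Returns the set of enemies that died on this tick.
    """
    died = (local_hits | remote_hits) & alive
    alive -= died
    return died
```

Since the union of the two report sets is the same no matter which one is "local", both servers remove the same enemies on the same tick, even when only one client saw the hit.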

So now, the actual mechanism by which the game keeps in sync from system to system is set, but how does it handle the three main enemies of the network programmer?

Network Gaming’s Most Wanted #1 – Latency

“Lag” is one of the most dreaded words in the network gaming world. It’s always going to be present – nothing can communicate across the internet faster than the speed of light (and, because of transmission over copper, it’s really more like a sluggish two-thirds the speed of light!). Routers and switches also add their own delays to the mix. According to statistics gathered by Bungie from Halo 3’s gameplay, most gamers (roughly 90%) end up with a round-trip latency of less than (or equal to) 250ms. That is, it takes a quarter of a second for data to go from System A to System B and back to A. That’s a long time for a fast-action networked game! Thankfully, because messages sent from system to system in this game’s network design are never dependent on messages from the other, nothing has to round trip, so the latencies can effectively be halved, making the system much better at handling lag (because, quite frankly, there’s just less of it!).
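To put the halving in terms of frames, here's a tiny back-of-the-envelope helper in Python. The 60 frames per second default is my assumption for illustration, not a figure from the game.

```python
def one_way_delay_frames(round_trip_ms, frames_per_second=60):
    """Frames of delay when data only has to travel one way.

    Assumes the one-way trip takes half the round-trip time.
    """
    one_way_ms = round_trip_ms / 2
    return one_way_ms * frames_per_second / 1000
```

With a 250ms round trip, `one_way_delay_frames(250)` works out to 7.5 frames, compared with 15 frames if every message had to make the full round trip.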

As discussed previously, because the client can run ahead of the server and, thus, process local player input immediately, there’s no latency between what the player presses and what actually happens on-screen. But what about how the remote player’s actions look? With a ping under 100ms, there are next to zero visible discrepancies on the system. That is, low-ping games are virtually indistinguishable from locally-played games.

At around the 400ms ping mark, it does start to become obvious that things aren’t quite right – due to the interpolation of remotely-shot bullets, they accelerate up to a certain part of the screen until they reach their known location, then slow down to normal speed, which is fairly noticeable (I’m still trying to smooth this out a touch). When enemies get too close to the remote player as it fires, due to the delay, the bullets will collide with the enemy, but the enemy will live longer than it appears it should (because the local client does not reliably know that the remote bullet is actually still alive, it can’t deal actual damage to the enemy; it has to wait for the server to confirm).
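The bullet catch-up behavior can be illustrated with a simple sketch. This is one plausible way to get that "speed up, then settle" motion, not necessarily how the game does it; the function name, the `catchup_factor` parameter, and the one-dimensional positions are all assumptions for the example.

```python
def displayed_positions(fire_tick, learned_tick, speed, catchup_factor, until_tick):
    """Positions shown locally for a bullet fired remotely.

    The bullet was fired at fire_tick, but this machine only hears about it
    at learned_tick. It is drawn moving at speed * catchup_factor per tick
    until it reaches where it should be, then at normal speed.
    """
    shown = 0
    positions = []
    for tick in range(learned_tick, until_tick + 1):
        true_position = speed * (tick - fire_tick)   # where the bullet really is
        shown = min(shown + speed * catchup_factor, true_position)
        positions.append(shown)
    return positions
```

For a bullet that moves 4 units per tick and is learned about 6 ticks late, `displayed_positions(0, 6, 4, 2, 14)` moves 8 units per tick for the first five ticks, then drops back to 4 once it has caught up. The longer the delay, the longer that fast stretch lasts, which is why it becomes visible at high pings.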

Above 1-2 seconds of latency, all bets are off – the local player will find the game still perfectly playable, but the movements of the remote player will be completely erratic, and remotely fired bullets won’t act at all like they should. But, since 90% of gamers have much lower latencies, this is not really an issue. For the majority of gamers, the game will look and play pretty close to how it would if both players were in the same room.

Network Gaming’s Most Wanted #2 – Packet Loss

Latency’s lesser-known brother is packet loss, which is where data sent from one machine to another never makes it (due to routing hardware failure, power outage, NSA interception, alien abduction, etc). On a standard internet connection, you can generally assume that about 10% of the packets that you send will get lost along the way. Also, just because you send Packet A before Packet B doesn’t mean they’ll arrive in the right order – a machine might get a packet sent later before one that was sent earlier.

With the XNA runtime, there are four different methods that you can send packets with (obviously you can mimic these with any networking setup, but I’m using XNA so it’s my frame of reference here):

- Unreliable – the other system will get these in potentially any order, or it may not even get them at all. The name says it all – you can’t rely on these packets. This is probably not the best option to use.

- In-Order – These packets are for data for which you really only need the most recent data; you only care about the most recent score, for instance – not what the score was in a previous packet. Thus, these packets contain extra version information so that the XNA runtime can ensure that packets that arrive out of order don’t reach you. As soon as a new packet comes in, it becomes available to the game. If a packet that’s older than the most recent one comes in, it’s discarded. You immediately get new ones at the cost of never getting older ones. For many games, this is a perfect scenario.

- Reliable – These packets will always arrive. When the XNA runtime receives one of these, it sends an acknowledgement to the other system that it received it. If the system that sent it doesn’t receive such an acknowledgement, it’ll resend (and resend and resend and…) until it finally arrives at the destination. Packets sent reliably are not vulnerable to packet loss; if you send it, as long as the connection remains valid you know it will reach the destination eventually. However, these packets may not arrive in the proper order (you may receive Packet C before Packets A or B).

- Reliable, In-Order – On the surface, this sounds like the best choice! These packets always arrive in the right order, and they always arrive! That is, you will always get Packets A, B, C, and D, in that order. There’s a hidden downside, though: If the game receives Packet C, but has not yet received Packets A or B, it has to hold onto that packet until both A and B arrive, which, if they need to be resent, can really ratchet up the latency. Any one packet that needs to be resent will hold up the whole line until it arrives. Clearly, this type of packet should only be used when absolutely necessary – for normal gameplay, it’s better to use In-Order or Reliable on their own.
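The difference between In-Order and Reliable, In-Order delivery is easier to see with a small simulation. The sketch below is in Python and only models the behavior described in the list above; it is not XNA code and says nothing about how the runtime implements it internally. Packets are represented by sequence numbers starting at 0, and the function names are hypothetical.

```python
def in_order_receive(arrivals):
    """In-Order style: hand over a packet only if it is newer than any seen so far."""
    newest = -1
    delivered = []
    for seq in arrivals:
        if seq > newest:
            newest = seq
            delivered.append(seq)
        # older packets are silently discarded
    return delivered


def reliable_in_order_receive(arrivals):
    """Reliable, In-Order style: hold packets until every earlier one has arrived.

    Returns (arrival_index, seq) pairs showing when each packet is released.
    """
    expected = 0
    held = set()
    delivered = []
    for index, seq in enumerate(arrivals):
        held.add(seq)
        while expected in held:
            held.remove(expected)
            delivered.append((index, expected))
            expected += 1
    return delivered
```

If packets arrive in the order 2, 0, 1, 3, the In-Order receiver hands over 2 and 3 right away and drops 0 and 1. The Reliable, In-Order receiver delivers all four, but packet 2 sits in the queue until the third arrival, which is the "holding up the whole line" effect.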

Eventually, I decided to send packets in the Reliable way, but not In-Order. But, to minimize the amount of time that the game has to wait for resent packets to arrive, each packet contains eight frames’ worth of input/collision data. That way, as long as one out of every string of 8 packets arrives, the server will have all of the relevant information to sync up to that point. And if, for some reason, 8 packets in a row are all lost in transmission, they’ll be resent and make it eventually anyhow.
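Building a packet with that redundancy might look something like this minimal Python sketch. The `Sender` class and the shape of the frame data are hypothetical; the only thing taken from the design is that each packet carries the eight most recent frames.

```python
from collections import deque

FRAMES_PER_PACKET = 8


class Sender:
    def __init__(self):
        # Keeps only the most recent FRAMES_PER_PACKET frames.
        self.recent = deque(maxlen=FRAMES_PER_PACKET)

    def build_packet(self, frame_number, frame_data):
        """Record this frame and return the packet contents to send."""
        self.recent.append((frame_number, frame_data))
        return list(self.recent)
```

Each frame ends up riding along in eight consecutive packets, so the receiver only needs one of those eight to get through.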

To handle this, the game essentially has a list of frames that it’s received data for (8 of which come in with each packet).

- For each frame that a packet contains, if the frame has already been simulated by the server (a frame from the past), that frame is ignored.

- Similarly, if the frame is already in the list, it’s ignored.

- If it’s not a past frame and it isn’t already in the list, add it to the list (in order – the list is sorted from earliest to latest).

- After this is done, if the next frame that the server needs to simulate is in the list, remove it from the list and go! Otherwise, wait until it is.
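The steps above can be sketched in Python as follows. This is an illustration of the list-handling logic only, with hypothetical names (`FrameBuffer`, `receive_packet`, `pop_ready`); frames are `(number, payload)` pairs and numbering is assumed to start at 0.

```python
import bisect


class FrameBuffer:
    def __init__(self):
        self.next_frame = 0   # next frame the server needs to simulate
        self.pending = []     # sorted frame numbers received but not yet simulated
        self.data = {}        # frame number -> payload

    def receive_packet(self, frames):
        for number, payload in frames:
            if number < self.next_frame:    # frame from the past: ignore
                continue
            if number in self.data:         # already in the list: ignore
                continue
            bisect.insort(self.pending, number)   # keep earliest-to-latest order
            self.data[number] = payload

    def pop_ready(self):
        """Return payloads for each frame that can be simulated right now."""
        ready = []
        while self.pending and self.pending[0] == self.next_frame:
            number = self.pending.pop(0)
            ready.append(self.data.pop(number))
            self.next_frame += 1
        return ready
```

If a packet holding frames 2 and 3 shows up first, `pop_ready()` returns nothing and the server waits. Once any packet containing frames 0 and 1 arrives, all four frames come out in order.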

The game doesn’t care which order the frames are received in – as long as it has the next one in the list, it’ll be able to continue on. Because of the redundancy, it rarely has to wait on a resend due to packet loss. In fact, using XNA’s built-in packet loss simulation (thank you, XNA team!), the packet loss has to be increased to over 90% before the latency of the simulation starts to increase (hypothetically, the magic number is above 87.5% packet loss – greater than 7/8 packets lost).
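The numbers behind that redundancy can be worked out with a couple of one-liners. This Python sketch assumes every packet is lost independently with the same probability, which is a simplification (real loss tends to come in bursts); the function names are made up.

```python
def chance_all_copies_lost(loss_rate, copies=8):
    """Probability that every packet carrying a given frame is lost.

    Assumes independent loss per packet (an assumption, not a measurement).
    """
    return loss_rate ** copies


def expected_copies_received(loss_rate, copies=8):
    """Average number of copies of each frame that get through."""
    return copies * (1 - loss_rate)
```

At 10% loss, the chance that all eight copies of a frame vanish is about one in a hundred million. At 87.5% loss, `expected_copies_received` drops to exactly one copy per frame on average, which is where the 7/8 figure comes from.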

The disadvantage of this system is that it does add to the bandwidth use, as each packet now contains an average of eight times as much data as it would normally, which brings us to…

Network Gaming’s Most Wanted #3 – Bandwidth

Ah, bandwidth. There’s no point in having a low-latency connection between two systems if the game requires too much bandwidth for the connection to keep up. Because the Xbox Live bandwidth requirement is 8KB/s (that’s kilobytes), that became my goal as well.

This is where I overengineered my system a bit. I was estimating, as a worst case, an average of 10 collisions per frame. With packet header overhead, voice headset data, and everything, with 8 frames worth of data in each packet, I expected to be just BARELY below the 8KB/s limit.
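An estimate like this boils down to simple multiplication. The Python sketch below shows the shape of the calculation; every size and rate in the example call is purely hypothetical (this post doesn't give the real byte counts or send rate), so treat it as a template to plug measured values into rather than as the game's actual figures.

```python
def bytes_per_second(packets_per_second, header_bytes, frames_per_packet,
                     input_bytes_per_frame, collisions_per_frame,
                     bytes_per_collision, extra_bytes_per_second=0):
    """Rough upstream bandwidth estimate for redundant per-frame packets.

    extra_bytes_per_second covers anything else on the wire, such as voice data.
    """
    per_frame = input_bytes_per_frame + collisions_per_frame * bytes_per_collision
    per_packet = header_bytes + frames_per_packet * per_frame
    return packets_per_second * per_packet + extra_bytes_per_second


# Purely hypothetical sizes, chosen only to show how the estimate scales
# with the number of collisions per frame.
worst_case = bytes_per_second(packets_per_second=10, header_bytes=20,
                              frames_per_packet=8, input_bytes_per_frame=2,
                              collisions_per_frame=10, bytes_per_collision=4)
typical = bytes_per_second(packets_per_second=10, header_bytes=20,
                           frames_per_packet=8, input_bytes_per_frame=2,
                           collisions_per_frame=2, bytes_per_collision=4)
print(worst_case, typical)
```

Since the collision count is multiplied by both the per-collision size and the number of frames per packet, it dominates the total, so an overestimate there inflates the whole budget.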

When I finally got the system up and running, it turned out the game was using less than 4KB/s. The average number of collisions per frame is closer to TWO than it is to 10 (unfunny side note: I had the right number, but the wrong numerical base. My answer was perfect in binary), even with a lot of stuff going on (though an individual frame may have many more, there are usually large spaces between collisions as waves of bullets smack into enemies). The most I’ve been able to get it up to with this system is about 5KB/s, which means the game still has a delightful 3KB/s of breathing room. I think I’ll keep it that way!

Final Remarks

Hopefully this has been an informative foray into the design of a network protocol for an arcade-style shoot-em-up game. I’m no network professional – in fact, this is the first network design I’ve ever done, so I’m sure people who do this stuff for a living are laughing at my pathetic framework. If anyone has any suggestions as to how I might improve my network model, I’m all ears – while it works pretty well, I’m always open to ideas!
